Multimodal AI refers to systems that can understand and generate information across multiple types of data, including text, images, audio, and video. Unlike traditional AI models that work with a single modality, multimodal models are designed to process and integrate information from several sources at once.

This enables more accurate reasoning, richer interactions, and broader application across real-world tasks.

From GPT-4o's ability to handle voice and visual input to applications in healthcare diagnostics, robotics, and media generation, multimodal AI is quickly becoming central to how machines perceive and respond to the world.

Understanding Multimodal AI: Definition and Basics

Multimodal AI systems combine different types of data, such as text, images, audio, and video, within a single model. Each input is first processed by a dedicated encoder. Transformer-based language models typically handle text. Images are processed using convolutional neural networks or vision transformers. Audio is often encoded using spectrogram-based models or waveform transformers.

Once each input is encoded into a vector representation, the model maps these vectors into a shared latent space.

This shared space allows the system to learn relationships across modalities. For example, it can link a caption to an image or match a spoken phrase with relevant video content. Attention mechanisms are often used to align and integrate information across these inputs in a coherent way.

Multimodal AI enables more flexible and context-aware outputs. A single model can perform tasks such as describing images in natural language, answering questions about video content, or generating responses based on a combination of text and visuals.

How Multimodal AI Differs from Traditional AI

The foundation for multimodal AI was laid by early work in image captioning and visual question answering. Notable progress began with models like Show and Tell (Google, 2015), which combined convolutional networks with recurrent language models. Research at FAIR (Facebook AI Research) and OpenAI further advanced the field with architectures that were trained on paired text-image datasets at scale.

CLIP (Contrastive Language–Image Pretraining), released by OpenAI in 2021, marked a major turning point. CLIP learned visual concepts directly from natural language supervision, allowing it to generalize to a wide range of image classification tasks without fine-tuning.

CLIP was announced alongside DALL·E and was followed by Flamingo from DeepMind and, more recently, GPT-4 with vision capabilities.

These developments reflect a shift from task-specific pipelines to general-purpose models that can handle multiple input types within a unified framework.

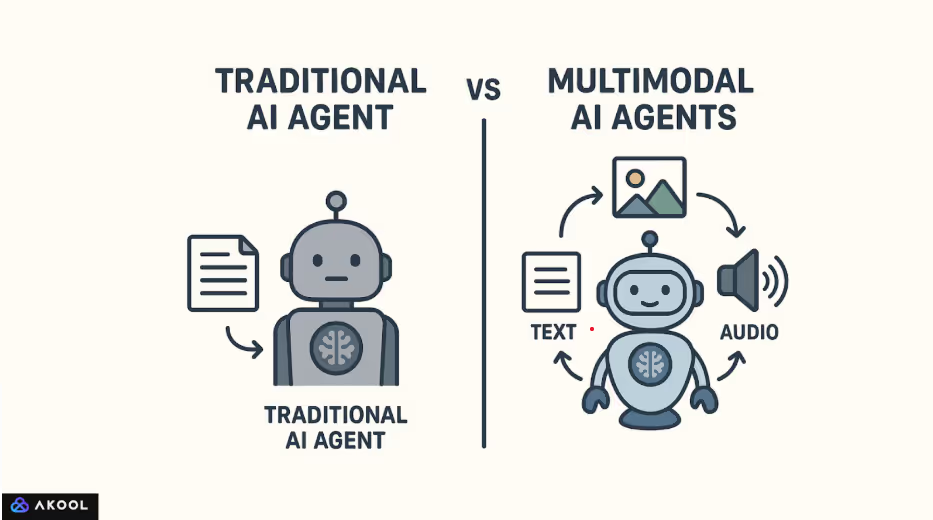

Traditional AI models are typically designed to process a single type of input. For example, a language model like GPT-2 is trained only on text, while a convolutional neural network like ResNet is optimized for image classification.

These models operate within isolated domains and cannot naturally interpret or relate information across different modalities.

Multimodal AI, by contrast, is built to process and combine multiple types of data within a single system. It does not treat text, images, or audio as separate streams but instead encodes them into a common representational space. This shared space allows the model to draw connections between modalities.

For example, it can associate an image with a caption, match a sound with a visual scene, or respond to spoken language with a generated video frame.

How Multimodal AI Works: Technical Components

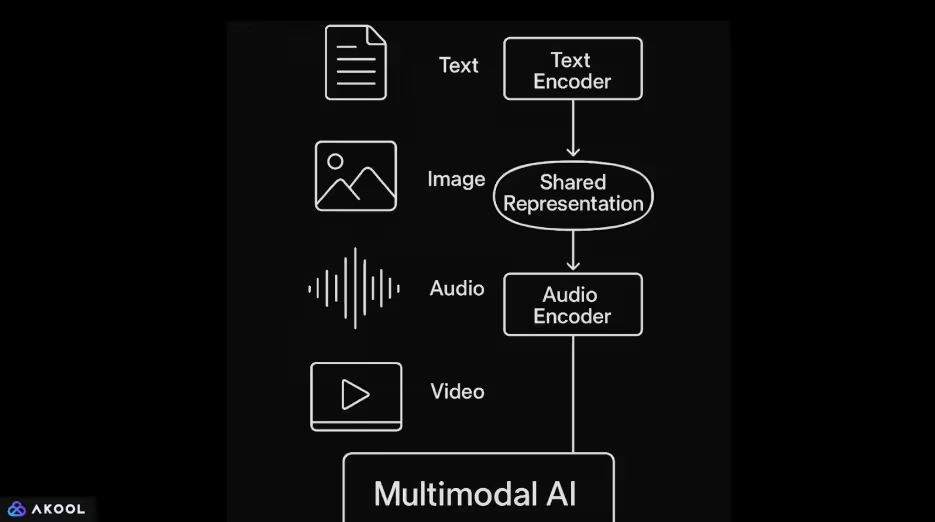

Multimodal AI works by enabling a single system to process, align, and reason across multiple types of input data, such as text, images, audio, and video.

Rather than treating each data type in isolation, it creates a shared understanding by translating each modality into a form that can be compared, combined, and used jointly in downstream tasks.

1. Encoding Each Modality

The process begins with encoding raw inputs into structured representations:

- Text is encoded using transformer-based language models that capture semantics and syntax.

- Images are processed through convolutional neural networks or vision transformers to extract visual features.

- Audio is converted into spectrograms or handled directly as waveforms, using specialized encoders such as AudioMAE (spectrogram-based) or Wav2Vec (waveform-based).

- Video involves both spatial and temporal processing, often using 3D CNNs or time-aware attention mechanisms.

Each encoder transforms the input into a high-dimensional vector that captures its most relevant features.
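As a rough illustration of the encoder interface described above, the sketch below uses two deliberately simple stand-ins: a hashed bag-of-words function in place of a transformer text encoder, and patch averaging in place of a CNN or vision transformer. The function names, dimensions, and sample inputs are assumptions chosen for illustration. The point is only that each modality ends up as a fixed-length vector of numbers.

```python
import zlib

def encode_text(text: str, dim: int = 8) -> list[float]:
    """Toy text encoder: hashed bag-of-words counts (stand-in for a transformer)."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # crc32 gives a hash that is stable across runs, unlike built-in hash()
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    return vec

def encode_image(pixels: list[list[float]], patch: int = 2) -> list[float]:
    """Toy image encoder: mean intensity of each non-overlapping patch
    (stand-in for a CNN or vision transformer)."""
    rows, cols = len(pixels), len(pixels[0])
    features = []
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            block = [pixels[i][j]
                     for i in range(r, min(r + patch, rows))
                     for j in range(c, min(c + patch, cols))]
            features.append(sum(block) / len(block))
    return features

# Hypothetical inputs: a caption and a 4x4 grayscale image
text_vec = encode_text("a red mug on a table")
image_vec = encode_image([[0.0, 0.2, 0.4, 0.6],
                          [0.1, 0.3, 0.5, 0.7],
                          [0.9, 0.8, 0.7, 0.6],
                          [0.5, 0.5, 0.5, 0.5]])
```

Real encoders are learned from data and produce vectors with hundreds or thousands of dimensions, but the input/output contract is the same.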

2. Mapping to a Shared Representation Space

The encoded vectors are projected into a shared latent space, where information from different modalities becomes comparable. This space is trained so that semantically similar content, such as an image and its caption, lies close together. Models like CLIP achieve this by using contrastive learning, which pulls matching pairs together and pushes non-matching pairs apart.

This shared space is the core enabler of cross-modal understanding. It allows, for example, a model to retrieve an image based on a text query or generate text based on visual input.
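The contrastive objective can be sketched in a few lines. The example below is a minimal, pure-Python version of a symmetric contrastive loss over a batch in which image i is paired with text i. The function names, the tiny two-dimensional embeddings, and the temperature value of 0.07 are assumptions chosen for illustration, not the training code of any particular model.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy(logits, target):
    """Negative log-softmax of the target entry, computed stably."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[target]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss for a batch where image i matches text i."""
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    n = len(images)
    # sim[i][j]: temperature-scaled cosine similarity of image i and text j
    sim = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
           for img in images]
    # Each image should pick out its own text, and each text its own image
    image_to_text = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    text_to_image = sum(cross_entropy([sim[i][j] for i in range(n)], j)
                        for j in range(n)) / n
    return (image_to_text + text_to_image) / 2

# Matching pairs point in similar directions, so the loss is low
aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.9, 0.1], [0.1, 0.9]])
# Swapped captions put the matching pairs far apart, so the loss is high
shuffled = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.1, 0.9], [0.9, 0.1]])
```

Minimizing this loss during training is what pulls matching image and text vectors together and pushes mismatched ones apart.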

3. Cross-Modal Alignment and Fusion

Once encoded, the information from different modalities is integrated through attention mechanisms and fusion layers. This is where the model learns to align relevant parts of each modality—for example, linking a word in a sentence to a specific region in an image.
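One common alignment mechanism is cross-attention, in which vectors from one modality act as queries over vectors from another. The sketch below is a simplified scaled dot-product attention that assumes word vectors and image-region vectors already share a dimension. It omits the learned query, key, and value projections that real models use, and the embeddings are hypothetical.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query (e.g. a word) attends over
    all keys (e.g. image regions) and returns a weighted mix of the values."""
    scale = math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wi * v[d] for wi, v in zip(w, values))
                        for d in range(len(values[0]))])
    return outputs, weights

# Hypothetical embeddings: two words and three image regions
word_vectors = [[4.0, 0.0], [0.0, 4.0]]
region_vectors = [[0.0, 3.0], [3.0, 0.0], [0.1, 0.1]]
fused, attn = cross_attention(word_vectors, region_vectors, region_vectors)
```

Each row of `attn` shows how strongly one word attends to each region, which is the "linking" of words to image regions described above.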

Fusion strategies vary by architecture:

- Early fusion combines inputs before any deep processing.

- Late fusion merges outputs after each modality is independently processed.

- Intermediate (joint) fusion is widely used in recent architectures and is often the most effective, because it enables rich interactions at multiple levels of the model.
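The difference between early and late fusion can be shown with a toy scoring model. In the sketch below, a single linear function stands in for "deep processing", and the feature vectors, weights, and mixing factor are hypothetical values. Intermediate fusion is not shown, since it needs layers that exchange information partway through the model.

```python
def linear(weights, bias, features):
    """A single linear scoring function, standing in for a deeper model."""
    return sum(w * x for w, x in zip(weights, features)) + bias

def early_fusion(text_vec, image_vec, weights, bias):
    """Concatenate the modality features first, then apply one joint model."""
    return linear(weights, bias, text_vec + image_vec)

def late_fusion(text_vec, image_vec, text_model, image_model, mix=0.5):
    """Score each modality with its own model, then merge the two outputs."""
    text_score = linear(*text_model, text_vec)
    image_score = linear(*image_model, image_vec)
    return mix * text_score + (1 - mix) * image_score

# Hypothetical feature vectors and weights
text_vec, image_vec = [1.0, 2.0], [0.5]
early_score = early_fusion(text_vec, image_vec, [0.1, 0.2, 0.4], 0.0)
late_score = late_fusion(text_vec, image_vec, ([0.1, 0.2], 0.0), ([0.4], 0.0))
```

In early fusion the joint model sees all features at once and can learn interactions between them. In late fusion the modalities only meet at the final averaging step.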

4. Joint Reasoning and Output Generation

With aligned multimodal representations, the model can perform tasks that require understanding of all input types together. It can generate a textual description of an image, answer questions about a video, or carry out spoken dialogue grounded in visual context.

A decoder or task-specific head transforms the fused representations into outputs. In generative multimodal models like GPT-4o or Flamingo, the decoder is typically a transformer trained to produce sequences of text, conditioned on both language and non-language inputs.

Most multimodal systems are trained in two phases:

- Pretraining: Large-scale datasets with paired modalities (like image-text or video-text) are used to learn general-purpose representations. This phase is often self-supervised.

- Fine-tuning: The model is then adapted to specific tasks using smaller, supervised datasets. Some newer models support zero-shot or few-shot learning directly, without further training.

Popular Multimodal AI Models

1. CLIP (Contrastive Language–Image Pretraining) – OpenAI

Released: 2021

Modalities: Text and image

Key Features:

- Trained on 400 million image-text pairs scraped from the internet.

- Learns a shared embedding space for images and text using contrastive learning.

- Can perform zero-shot image classification by matching image embeddings with natural language labels.

Impact: Set a new standard for flexible vision-language tasks without fine-tuning.
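The zero-shot classification idea can be sketched as a nearest-neighbor lookup in the shared embedding space. The three-dimensional embeddings and label prompts below are hypothetical placeholders. In a real system they would come from trained image and text encoders, and this sketch does not call any actual CLIP API.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot_classify(image_emb, label_embs):
    """Pick the label whose text embedding is closest to the image embedding."""
    scores = {label: cosine(image_emb, emb) for label, emb in label_embs.items()}
    return max(scores, key=scores.get), scores

# Hypothetical text embeddings for natural language labels
label_embs = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
# Hypothetical embedding of the image to classify
image_emb = [0.8, 0.3, 0.1]
best_label, scores = zero_shot_classify(image_emb, label_embs)
```

Because the labels are ordinary text, new classes can be added by embedding a new phrase, with no retraining of the image encoder.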

2. DALL·E / DALL·E 2 – OpenAI

Released: 2021 / 2022

Modalities: Text to image (generative)

Key Features:

- Generates images from natural language prompts.

- DALL·E 2 improved resolution, realism, and editing capabilities.

Impact: Opened the door to creative AI tools and showed how text can control image generation precisely.

3. Flamingo – DeepMind

Released: 2022

Modalities: Text, image, video

Key Features:

- Designed for few-shot learning on multimodal tasks.

- Combines a frozen vision encoder with a pretrained language model and cross-attention layers.

Impact: Demonstrated strong performance across multiple vision-language benchmarks with very little task-specific training.

4. PaLM-E – Google Research

Released: 2023

Modalities: Text, image, robot sensor input

Key Features:

- Embeds real-world robot sensor input (such as camera images and state estimates) directly into a large language model (PaLM).

- Enables robots to follow multimodal instructions, like "go to the kitchen and look for a red mug."

Impact: Showed how language models can be grounded in physical environments and embodied agents.

5. GPT-4 with Vision (GPT-4V) / GPT-4o – OpenAI

Released: GPT-4V (2023), GPT-4o (2024)

Modalities: Text and image (GPT-4V); text, image, audio, and video (GPT-4o)

Key Features:

- GPT-4V introduced image input to GPT-4.

- GPT-4o ("omni") integrates all modalities natively, including real-time voice input and output.

- Single model processes and generates across modalities.

Impact: Moves toward real-time, unified AI assistants with vision, speech, and text understanding.

6. Kosmos-1 / Kosmos-2 – Microsoft Research

Released: 2023

Modalities: Text and image

Key Features:

- Combines vision and language through grounded understanding.

- Kosmos-2 introduced object grounding for spatial reasoning.

Impact: Focused on knowledge grounding and vision-language reasoning for general intelligence tasks.

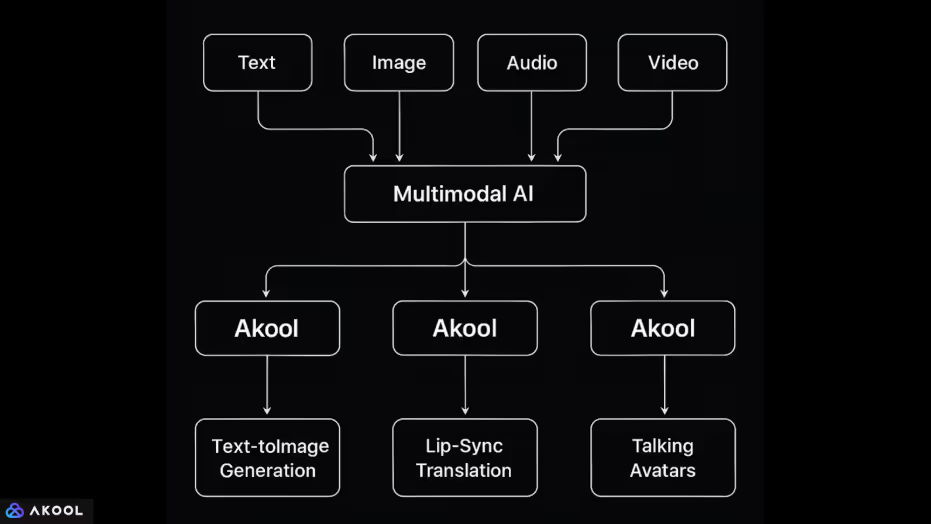

How Akool Uses Multimodal AI

Akool is a platform that integrates multiple data modalities (text, image, audio, and video) within a single AI-driven environment.

This integration allows it to perform complex media tasks that require the model to understand and generate across different input types. Here’s how each modality is handled and combined:

1. Text + Image

- Text-to-image generation: Users can enter prompts to generate images. This requires the system to map natural language inputs to visual content using a shared representation space, similar to models like DALL·E or Stable Diffusion.

- Image editing via prompts: Users can modify images (change background, lighting, pose, etc.) using natural language, showing the system’s ability to align text instructions with visual transformations.

2. Image + Audio + Video

- Face swapping and avatar animation: Akool enables users to animate faces in photos using audio input or text scripts. This requires aligning facial landmarks from an image with phoneme-level audio or textual timing, synchronizing movement and expression across modalities.

- Lip-sync translation: The platform can translate spoken audio into another language and modify the speaker’s lip movement in video to match the translated speech. This combines:

  - Audio-to-text (speech recognition)

  - Text translation (natural language processing)

  - Text-to-audio (speech synthesis)

  - Video re-rendering (visual editing with motion modeling)
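The four lip-sync stages listed above can be pictured as a chain in which each stage consumes the previous stage's output. The sketch below is purely illustrative and is not Akool's implementation or API. Every function name and field is hypothetical, and each stage is a stub that returns a placeholder string where a real system would run a model.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]

def build_pipeline(*stages: Callable[[State], State]) -> Callable[[State], State]:
    """Chain stages so each one receives the state produced by the previous one."""
    def run(state: State) -> State:
        for stage in stages:
            state = stage(state)
        return state
    return run

# Hypothetical stub stages; a real system would call a model in each one.
def transcribe(state: State) -> State:      # audio-to-text
    return {**state, "transcript": f"<transcript of {state['audio']}>"}

def translate(state: State) -> State:       # text translation
    text = f"<{state['target_lang']} translation of {state['transcript']}>"
    return {**state, "translation": text}

def synthesize(state: State) -> State:      # text-to-audio
    return {**state, "dubbed_audio": f"<speech for {state['translation']}>"}

def rerender(state: State) -> State:        # video re-rendering
    video = f"<{state['video']} lip-synced to {state['dubbed_audio']}>"
    return {**state, "output_video": video}

lip_sync = build_pipeline(transcribe, translate, synthesize, rerender)
result = lip_sync({"audio": "talk.wav", "video": "talk.mp4", "target_lang": "es"})
```

The ordering matters: translation needs the transcript, speech synthesis needs the translation, and the video stage needs the new audio track to drive the lip movement.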

3. Text + Audio + Video

- Talking avatars: A user can input a script (text), which is synthesized into speech and animated using a virtual avatar. The system maps text to voice, and voice to facial motion, blending natural language generation, text-to-speech, and facial animation.

- Live AI avatars: The Live Camera feature allows users to appear as avatars in video meetings. Here, real-time audio input (speech) and possibly a facial video feed (expression) are mapped to a synthetic animated character.