Introduction

The rise of image-to-video creation tools is transforming how creators turn their ideas and static images into lifelike video content. The Top 5 Best AI Video Creators for Image to Video empower professionals and newcomers alike by automating animation, camera movement, and even 3D effects from a single picture. With these platforms, a creator can take a simple photo or concept and generate a dynamic video with no filming or complex editing. The following sections review five leading AI video creators, focusing on their image-to-video strengths, key features, typical use cases, and limitations (such as watermarks or clip length). The Top 5 Best AI Video Creators for Image to Video in 2025 are Akool, Runway, Kling AI, Luma AI, and Pika Labs. Each tool brings something unique: Akool’s 4K quality and free trial, Runway’s generative models, Kling’s realism, Luma’s 3D depth, and Pika’s anime and cartoon creativity. Let’s dive into the Top 5 Best AI Video Creators for Image to Video and see how they simplify video creation for everyone.

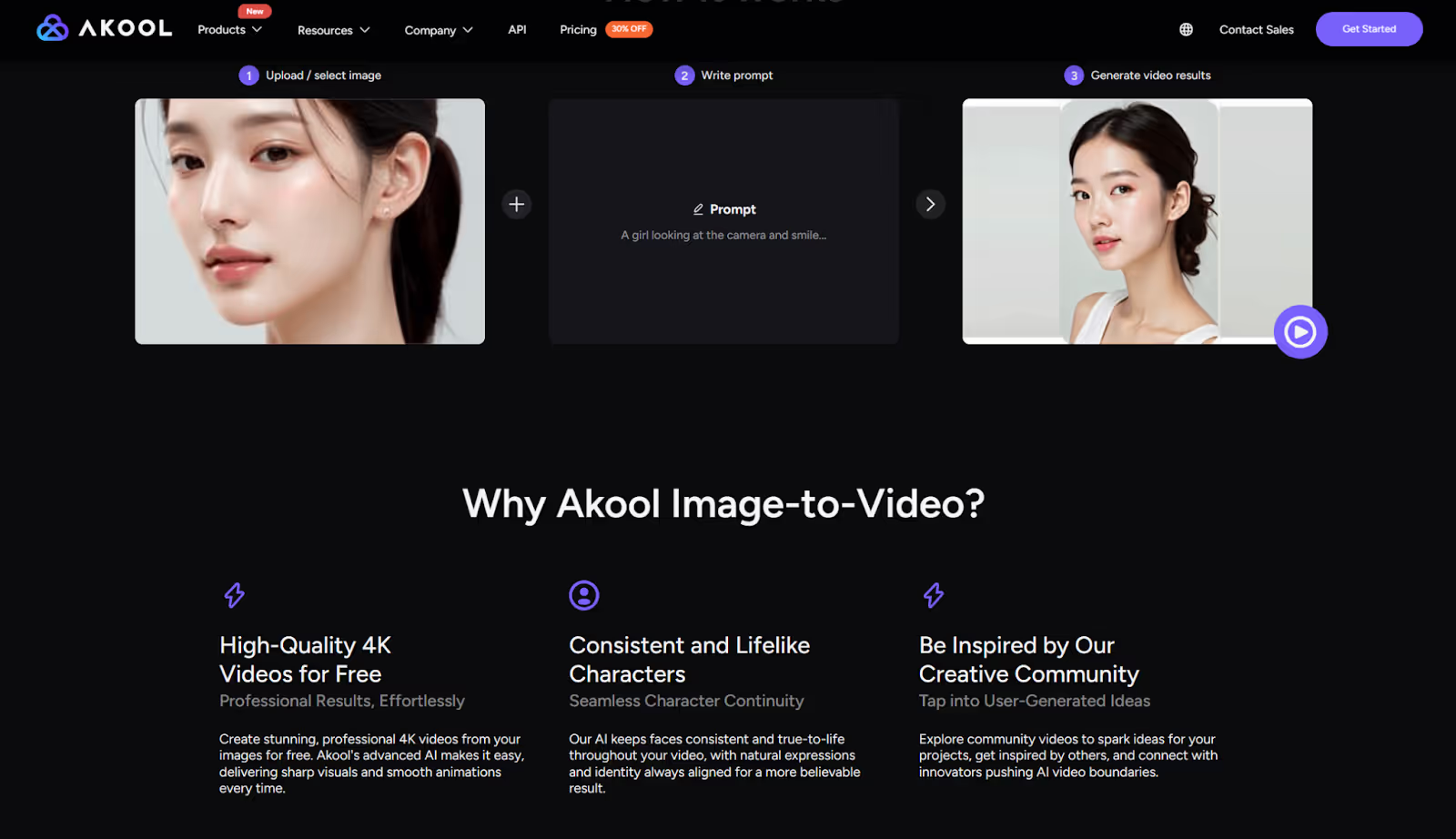

1. Akool — Best All-in-One AI Image-to-Video Platform with 4K Output

Akool’s image-to-video tool can turn any static image into a lifelike video with just one click. This all-in-one AI platform stands out for ultra-realistic results, supporting up to 4K Ultra HD video generation – even on its free tier. In seconds, Akool can animate a portrait or product photo with photorealistic motion and expressions, thanks to its proprietary real-time generation engine. The interface is 100% web-based and beginner-friendly, so no editing experience is needed to bring images to life.

Key Features:

- One-Click Image Animation: Instantly generate a video from any image. Upload a still photo and Akool will automatically create a short clip with movement – for example, making a person’s face blink, smile or talk. No manual animation required.

- Preset Movements & Expressions: Choose from preset animation styles (like talking head, subtle gestures, emotional expressions) to quickly add realistic motion to your image. You can also customize facial expressions, camera angles, or lip-sync dialogue for a truly personalized result.

- High-Quality 4K Output: Akool can render videos up to 4K resolution, delivering sharp visuals and smooth animations. It’s one of the few platforms offering professional 4K output on a free trial basis. This ensures your image-to-video content looks crisp and detailed, suitable for commercial use.

Use Cases: Akool is ideal when realism and quality are key. Creators on social media use it to make portraits speak or sing – for example, animating a selfie or a character artwork to deliver a message or joke. Marketing teams turn product images or mascots into quick promo videos (imagine a still product photo that suddenly rotates and describes its features). Educators have even enlivened historical photos or presentation graphics with subtle motions to engage viewers. In short, Akool’s all-in-one platform handles anything from a talking head virtual avatar to a moving product demo, without needing a camera crew or animation skills. Its free trial (no watermark) and integrated tools (including text-to-speech for voiceovers) make it a top choice for anyone looking to create a complete video from a single image.

2. Runway — Most Creative Image-to-Video Generator for Artists

Runway is a cutting-edge AI media platform known for highly creative image-to-video generation, which makes it popular among artists and designers. It lets you take an input image (or even just a concept or text prompt) and generate a visually imaginative video clip from it. Runway’s suite of generative AI models (Gen-1, Gen-2, and beyond) can apply styles, animate still images, or even transform entire videos with AI, giving creators a powerful sandbox for experimentation. If you’re looking to turn a digital painting or photograph into a moving piece of art, Runway stands out among the Top 5 Best AI Video Creators for Image to Video for its creativity and flexibility.

Key Features:

- AI Image-to-Video Generation: Runway can directly convert static images into animated sequences. For example, you can upload an illustration or photo, and Runway’s Gen-2 model will animate it with smooth motion or stylistic effects, effectively “bringing it to life.” This goes beyond simple pans/zooms – the AI can impose creative styles or movements so the output video feels like a new piece of visual art derived from the original image.

- Advanced Editing Tools: Beyond pure generation, Runway includes an integrated AI video editor. It offers features like automatic background removal, object masking, and scene detection. Creators can seamlessly replace backgrounds, add AI-generated effects, or splice together clips. This means after generating a video from your image, you can fine-tune it within the same platform – e.g. apply filters, adjust colors, or incorporate additional images – all with AI assistance.

- Multiple Generative Models: Runway’s platform houses various models for different needs. Gen-1 allows video-to-video transformations (stylizing existing footage using an image or prompt), Gen-2 handles text/image-to-video generation, and newer models keep expanding capabilities. This multi-model approach lets users experiment: you might generate a base video from an image, then use another model to apply a cinematic filter or expand it. The flexibility supports everyone from casual users to ML developers building custom workflows.

Use Cases: Runway is often used by digital artists, filmmakers, and social media creators who want surreal or stylistic videos. For example, an artist can take a static digital painting and have Runway animate it with drifting camera motion and changing light, resulting in an engaging short art film. Social media creators might use Runway to turn a meme image into a funny animated clip with text prompts. Filmmakers and video producers prototype scenes by supplying concept art or storyboards as input images – Runway can generate moving previews of how a scene might look. The tool shines for short-form content (a few seconds to tens of seconds) where creativity is the goal: music videos, experimental visuals, TikTok clips, or background animations for design projects. Its image-to-video prowess combined with editing means one can go from a single frame idea to a polished, shareable video entirely within Runway.

Limitations: Runway’s powerful tools come with some constraints, especially on the free plan. New users get a limited number of credits (around 125), which amounts to only a few seconds of video generation at high quality settings. Longer or multiple generations require a subscription or additional credits. Free outputs also carry a small watermark and lower resolution; removing the watermark and getting full HD or higher requires a paid plan. Another limitation is output length: historically, Runway’s Gen-2 clips were about 4 seconds by default, though recent updates (Gen-3/Gen-4) have introduced ways to extend this by stitching scenes. Still, generating very long videos is not Runway’s focus (you would create longer videos by editing together shorter AI-generated segments). Finally, like many generative models, Runway’s results can be hit-or-miss: the quality of animation depends on the input image and prompt. Highly abstract prompts or images may produce inconsistent results, so some iteration is usually needed for the best outcome. Despite these limits, Runway remains one of the Top 5 Best AI Video Creators for Image to Video when it comes to artistic potential and innovative features.
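Because Runway (like the other tools in this list) produces short clips, a longer video is usually assembled from several generated segments in a separate editing step. As a minimal sketch of that step, assuming the clips have been downloaded as MP4 files and that the free ffmpeg command-line tool is installed (it is not part of Runway), the Python snippet below builds the list file and argument list for ffmpeg's concat demuxer. The helper names are hypothetical and chosen for illustration.

```python
from pathlib import Path
from typing import List


def concat_list_text(clips: List[str]) -> str:
    """Build the text of an ffmpeg concat-demuxer list: one `file '...'` line per clip."""
    lines = []
    for clip in clips:
        # Inside single quotes, a literal ' is written as '\'' (close, escaped quote, reopen).
        escaped = clip.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def write_concat_list(clips: List[str], list_path: str) -> None:
    """Write the list file that ffmpeg reads with `-f concat`."""
    Path(list_path).write_text(concat_list_text(clips), encoding="utf-8")


def concat_command(list_path: str, output: str) -> List[str]:
    """Return the ffmpeg arguments that join the listed clips without re-encoding."""
    # -safe 0 allows arbitrary (e.g. absolute) paths in the list.
    # -c copy copies streams as-is, which only works if all clips share
    # the same codec, resolution and frame rate.
    return ["ffmpeg", "-f", "concat", "-safe", "0", "-i", list_path,
            "-c", "copy", output]
```

To run it, write the list with `write_concat_list(["shot1.mp4", "shot2.mp4"], "clips.txt")` and pass `concat_command("clips.txt", "combined.mp4")` to `subprocess.run(..., check=True)`. Stream copy keeps the join fast and lossless; if the clips were exported with different settings, dropping `-c copy` lets ffmpeg re-encode them into one consistent file.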

3. Kling AI — Highest Fidelity Short Clips from Image Prompts

Kling AI is an advanced video generator known for producing some of the most realistic and high-fidelity AI videos to date. Developed by Kuaishou (a major tech company in China), it burst onto the scene as a rival to models like OpenAI’s Sora, impressing creators with the photorealism of its outputs. Kling can create short video clips from either text or image prompts, and it excels when you want a result that looks like real footage. In fact, early demos were so lifelike that some viewers thought they were real videos. If top-tier visual quality is your priority, Kling AI ranks among the Top 5 Best AI Video Creators for Image to Video.

Key Features:

- Photorealistic Generation: Kling uses a sophisticated AI architecture (a 3D VAE + transformer-based approach) to ensure outputs obey physics and maintain detail. From a single image of a person or scene, it can reconstruct a video with natural-looking motion, expressions, and consistent lighting. The fidelity is evidenced by its benchmark wins – Kling 1.6 Pro ranked #1 globally in image-to-video quality as of March 2025.

- 1080p Resolution & 30 FPS Output: While many AI video tools stick to lower resolutions, Kling generates clips in full HD 1080p at up to 30 frames per second. The results are smooth and detailed – for example, fur on a moving animal or reflections in water are rendered clearly. (4K output is reportedly in testing for future versions.) This high resolution makes Kling’s short videos suitable for professional content or integration into higher-quality productions.

- Advanced Motion Controls: For image-to-video tasks, Kling provides unique control features to tailor the motion. Users can specify a start frame and end frame (i.e. give two images) and Kling will animate a seamless transition. There’s also a “motion brush” tool – you can draw a path on your image and have an object follow that path in the video. These features let you guide the AI, resulting in more precise animations (e.g. ensuring a car in a photo drives in a specific direction or a character walks along a chosen route).

Use Cases: Kling AI is best suited for creators who need ultra-realistic short videos, such as VFX artists, filmmakers, or advertisers prototyping concepts. For example, a filmmaker could feed in an image of a concept scene and get back a 5-second video with subtle camera movement and realistic atmosphere, to evaluate how it might look on film. Advertisers might use an image of a product and have Kling generate a cinematic rotation or environment around it, producing a quick product clip without a shoot. Social media creators have also used Kling to generate attention-grabbing clips; one widely shared example was a Kling-generated video of a panda playing guitar that went viral for its realism. Because Kling can interpret imaginative prompts with physical accuracy, it’s popular for fantasy or sci-fi visuals, e.g. turning concept art of a creature or landscape into a moving scene that feels straight out of a movie. Essentially, when you need a short video that could be mistaken for real footage, Kling is the go-to choice.

Limitations: As a cutting-edge model, Kling AI does have some caveats. Firstly, access was initially restricted – it launched via a Chinese app and required a Chinese phone number, though by 2025 an international web version (Kling 2.0) has become available. New users still face a learning curve and waitlist; the interface, while improving, may not be as straightforward as others because of many options and occasionally Chinese language elements. There are also duration constraints – currently, each generation maxes out around 10 seconds of video. Generating longer content means stitching clips together externally. Kling’s free tier is generous in quality but limited in speed: free users get ~66 credits per day, which may only produce part of a full HD video (full 5–10s clips often require hundreds of credits). Free generations also run slowly (sometimes hours in queue). In terms of output, while usually excellent, Kling can struggle with extremely complex prompts involving precise interactions or many characters – e.g. maintaining consistency of a character across multiple clips, or handling fast action with perfect accuracy (minor physics glitches can occur in complex scenes). Finally, the heavy computation means users need to plan credit usage carefully (a single high-quality clip might consume most of a monthly quota on paid plans). In summary, Kling is a powerhouse for image-to-video realism, but it’s best for short, planned clips and for users willing to navigate its resource demands.

4. Luma AI — Top 3D Image-to-Video Generation Engine

Luma AI is an innovative platform that specializes in transforming images (or sets of images) into videos with a 3D look and feel. If you’ve seen those cinematic animations where a still photo appears to have depth and the camera moves through it (often called the “parallax” effect), Luma automates that process with AI. What makes Luma one of the Top 5 Best AI Video Creators for Image to Video is its foundation in 3D reconstruction technology: it can take flat images and generate videos that simulate a three-dimensional environment, complete with realistic perspective shifts and camera motion. For creators who want to showcase products, landscapes, or any scene with an immersive 3D twist, Luma AI is the go-to choice.

Key Features:

- AI-Powered Image Animation: Luma uses AI to add natural motion effects to your still images. With minimal input, the system might introduce a gentle camera pan, zoom, or orbit around the subject, making the viewer feel like they’re moving within the scene. The transitions are smooth and cinematic – for example, a simple photograph of a city street can turn into a short video where the camera glides forward as if entering the photo.

- 3D Depth and Perspective: A standout feature of Luma is its ability to create an illusion of depth from 2D images. The AI analyzes the image to approximate a 3D structure: what is foreground, what is background. Then, when animating, it adjusts perspective accordingly. This means you get videos where, say, a tree in the foreground and mountains in the background shift relative to each other as the camera moves – providing a 3D parallax effect. If you provide multiple photos of an object or scene (from different angles), Luma can even construct a more accurate 3D model and generate a video sweeping around it, almost like a virtual camera in a real 3D space.

- High-Quality and Optional Interactivity: Luma’s output quality is high, often producing 1080p videos with realistic textures and lighting. It also offers an API and integration for developers or enterprises. Notably, Luma started as a NeRF (Neural Radiance Field) app for capturing real-world objects in 3D. This heritage means advanced users can create interactive 3D views or integrate Luma’s image-to-video generation into apps (for instance, a real estate app that turns property photos into a 3D video tour). For most creators, the web and mobile app interface makes it simple: upload images, choose your motion or effect, and generate the video.
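Luma does not publish the internals of its pipeline, so the following is only an illustration of the general depth-based parallax idea described above, under a simple pinhole-camera assumption: when the camera slides sideways by a distance dx, a point at depth Z shifts in the image by about f·dx/Z pixels (f is the focal length in pixels), so near objects move more than distant ones. The sketch below, with hypothetical function names, forward-warps a single image row given a per-pixel depth estimate. Pixels revealed behind a moving foreground object come back as holes (`None`) that a real system would have to fill in, which is one reason edges can warp when the camera moves a lot.

```python
from typing import List, Optional


def parallax_shift(depth: float, camera_dx: float, focal_px: float) -> float:
    """Horizontal image shift (pixels) of a point at `depth` when a pinhole
    camera translates sideways by `camera_dx` (same units as depth)."""
    # Projection is x = f * X / Z; moving the camera by +dx changes X to X - dx,
    # so the projected point moves by -f * dx / Z. Small Z means a larger shift.
    return -focal_px * camera_dx / depth


def warp_row(colors: List[str], depths: List[float], camera_dx: float,
             focal_px: float) -> List[Optional[str]]:
    """Forward-warp one image row to a new camera position.
    Disoccluded pixels (holes) are returned as None."""
    width = len(colors)
    out: List[Optional[str]] = [None] * width
    zbuf = [float("inf")] * width  # depth of whatever currently occupies each pixel
    for x, (color, z) in enumerate(zip(colors, depths)):
        new_x = round(x + parallax_shift(z, camera_dx, focal_px))
        # Keep the pixel only if it lands inside the row and is nearer than
        # what is already there (a simple z-buffer test).
        if 0 <= new_x < width and z < zbuf[new_x]:
            zbuf[new_x] = z
            out[new_x] = color
    return out


# A near tree ("T", depth 2) in front of distant mountains ("M", depth 100).
row = ["M", "M", "T", "M", "M", "M"]
depth = [100.0, 100.0, 2.0, 100.0, 100.0, 100.0]
print(warp_row(row, depth, camera_dx=1.0, focal_px=4.0))
# → ['T', 'M', None, 'M', 'M', 'M']
```

In the example, the tree jumps two pixels while the mountains stay put, and the spot the tree vacated becomes a hole: that relative motion is the parallax effect, and the hole is the gap a generator must plausibly paint in.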

Use Cases: Luma AI is perfect for scenarios where you want to make static visuals more engaging by adding depth. Photographers use Luma to animate still shots – for example, taking a portrait and creating a subtle push-in video that adds drama to the photo. Product showcase is a big use case: e-commerce sellers can input product images and let Luma generate a 360° rotation video or a sweeping zoom that highlights the product’s form. In real estate or architecture, a single photograph of a room can be turned into a short walkthrough video, giving a sense of space to potential viewers. Content creators and marketers love using Luma for social media posts, turning what would be a flat infographic or flyer into a moving 3D story (text or logos can be placed in an image and then animated with depth). Even game designers or concept artists use Luma to prototype scenes – for example, generating a quick fly-through of a concept art landscape to visualize it better. Essentially, Luma AI blurs the line between 2D and 3D, enabling immersive storytelling from ordinary images.

Limitations: While Luma is powerful, users should be aware of a few limitations. The free plan on Luma’s Dream Machine (as of mid-2025) is somewhat restricted: it only allows image-based generations (no text-to-video on free), and outputs are capped at 720p with a watermark. Full HD 1080p without a watermark requires a paid subscription. If you supply just a single image, the AI has to infer the scene’s depth from that one picture, so the 3D effect tends to be subtle for realistic scenes. Extremely complex scenes or images with unclear depth might not animate perfectly (you might see some warping at edges when the camera moves a lot). To get a very pronounced 3D result, you often need multiple images or a short video clip as input (which Luma’s app can capture and turn into a 3D model). Another limitation is clip length: Luma’s image-to-video clips are usually a few seconds long (ideal for a quick orbit or pan). If you need a longer sequence, you would generate multiple clips or use the app’s keyframe tool to piece together moves, which takes more effort. Finally, because Luma relies on heavy AI processing (especially when constructing 3D from scratch), generation can take some time depending on complexity: not as slow as some tools, but expect a minute or two for detailed scenes. Despite these limitations, Luma AI remains uniquely positioned in giving 3D flair to image-based videos, solidifying its spot in the Top 5 Best AI Video Creators for Image to Video.

5. Pika Labs — Best Anime-Style AI Image-to-Video Animator

Pika Labs is an AI video generation platform that has gained a reputation for its anime-style and stylized animations. It allows users to create short videos from either text or image prompts, with a particular strength in transforming illustrated or imaginative images into animated clips. If you’re a creator who loves anime, cartoons, or creative visual effects, Pika Labs stands out among the Top 5 Best AI Video Creators for Image to Video for producing those kinds of vibrant, artistic videos. The platform originally launched via a Discord bot and has since developed a web interface, making it easy to go from a static image or idea to a dynamic video with just a prompt.

Key Features:

- Text & Image to Video Generation: Pika Labs supports both text-to-video and image-to-video prompts. You can simply describe a scene or upload an image and let Pika generate a short animated video clip. The outputs are typically a few seconds (about 1–5 seconds long) but packed with detail. For example, feeding in a fantasy character image might yield a brief animation of that character blinking and the background moving, as if it’s a scene from an anime film. This dual input flexibility means you can start with a blank idea or enhance an existing image.

- Multiple Styles & Effects (“Pikaffects”): Pika Labs offers unique creative effects like “Poke It” and “Tear It” which add stylized transformations to videos. These are part of a set of “Pikaffects” and other features (Pikadditions, Pikaswaps, etc.) that allow swapping characters or adding elements into the video via image prompts. Essentially, you can not only animate an image but also composite multiple AI elements. The tool is particularly known for anime and cartoon styles – many creators use Pika to generate anime scenes or game-like cinematic shots, as the engine tends to preserve a drawn or CGI aesthetic nicely. The aspect ratio and duration can be customized too (various common ratios like 16:9, 9:16, 1:1, etc., are supported, and you can choose video length up to ~5 seconds or slightly more with new updates).

- Fast and Accessible: Pika Labs emphasizes quick generation and iterative creativity. There are templates and presets for common tasks, and the interface (including Discord bot commands) allows adding parameters like camera movement, FPS, or guidance strength for advanced control. For most users, though, it’s straightforward: input your prompt/image, optionally select an effect (like “Add Rain” or “Anime filter”), and generate. The free basic plan comes with a number of video credits (around 80–150 per month as of 2025), which is enough to try out many animations. Pika’s community is also a perk – because it started on Discord, there’s a wealth of shared prompts and examples to learn from, fueling creative inspiration.

Use Cases: Pika Labs is beloved by content creators who want to produce stylized animated content quickly. Think of a comic artist who wants to animate a panel of their comic: Pika can take that drawn image and generate a short video where the camera zooms in and the character moves slightly, adding a dramatic effect to the artwork. It’s also used for creating anime-style music videos or GIFs; users might generate a series of 3-second anime landscape clips to accompany a song, for instance. Social media marketers have used Pika to make eye-catching animated posts (imagine a static mascot logo that gets animated with sparkles or movement). Another use case is prototyping for animation: if you have a character design, Pika Labs can animate it doing a simple action (like waving or turning) to help visualize how it might look in motion. Because Pika can add objects to real videos (Pikadditions) or swap them out (Pikaswaps), video editors use it to experiment with VFX, for example replacing an object or person in real footage using just an image of something else. From purely creative animations to practical effects, Pika Labs serves a wide range of needs, with a bias toward imaginative, fun visuals.

Limitations: Pika Labs, by design, focuses on short clips, usually 5 seconds or less per generation for most models (though newer versions have extended this to around 10 seconds in some cases). So it’s not for making a full-length animation in one go; you would need to string together outputs if you want a longer story. The output resolution, while improving, is generally optimized for web and social sharing (often around 720p, or 1080p on higher-tier models). Extremely high realism is not Pika’s domain: its strength is stylized content, so if you try to get a live-action look, you might not get the same fidelity as a tool like Kling. There can be some flicker or consistency issues in outputs (common in AI video generation); for example, an anime character’s outfit might subtly change between frames. Pika mitigates this with features for character consistency (such as reusing the same seed, or newer features like Pikaframes for keyframing scenes), but this area is still evolving. Another limitation is the credit system: while there is a free tier, complex features or newer models (e.g. Pika 2.1 or 2.2) consume more credits (some advanced effects can cost 60 or more credits per video). This means free users might only generate a handful of videos before needing to wait for the next month or upgrade. Lastly, because Pika Labs started on Discord, new users on the web platform might find the plethora of features and unusually named effects a bit overwhelming at first. It’s wise to start with simple prompts and gradually try the advanced options. Despite these limitations, Pika Labs holds its place in our Top 5 Best AI Video Creators for Image to Video because it unlocks a level of creative animation that few others do, especially for fans of anime and vibrant visual styles.

Conclusion

In conclusion, AI image-to-video tools have significantly simplified the video creation process. What once required skilled animators or videographers can now be achieved by anyone with an idea and a single image. Our exploration of the Top 5 Best AI Video Creators for Image to Video shows that each platform has its niche: Akool delivers an unparalleled all-in-one experience with realistic animation, 4K output, and even audio integration, making it possible to create a full video from a photo; Runway offers the most creative, artistic generation and editing tools; Kling AI produces the most photorealistic short clips; Luma AI adds 3D depth and camera motion; and Pika Labs excels at anime and stylized effects.

When choosing among the Top 5 Best AI Video Creators for Image to Video, consider your goal. If you prioritize the highest quality and resolution with a straightforward workflow, Akool is an excellent first stop. It’s free to try, so you can upload an image and see immediate results in 4K quality – a capability rivals often reserve for paid plans. Akool’s all-in-one nature (visuals + voice) means you can literally generate a talking video message from a photograph in one platform, which is a compelling advantage. We highly encourage you to explore Akool first – take advantage of its free trial and experiment with bringing your own photos or ideas to life. Whether it’s for a professional project or a personal creative experiment, Akool can deliver professional-grade, lifelike videos with minimal effort. From there, you can also try the others on our list for more specialized needs (creative styling with Runway, ultra-realism with Kling, etc.). The bottom line is that AI image-to-video tools are empowering creators like never before. So go ahead – pick your favorite AI video creator, upload that image, and watch your visual ideas transform into reality. Your audience will be amazed at how quickly your static content becomes dynamic video, and you’ll be one step ahead in the new era of AI-driven creativity.
