Brands, film studios, and companies constantly seek innovative ways to scale their content creation and engage with their audiences.

Companies have been using AI to create spokesperson videos and models to capture a new audience while delivering messaging in a compelling and engaging manner.

How are they doing it?

With auto lip sync technology, which automates and streamlines the process of creating lifelike AI avatars and models with perfect lip synchronization.

AI spokespeople and models aren't the only use cases of auto lip sync technology; it's possible to use the technology for movies too.

Learning how to use this AI technology is more important than ever if you want to avoid getting left behind.

Creating an AI Spokesperson with Perfect Lip Syncing


Using AKOOL's auto lip sync feature, brands and companies can create an AI spokesperson with perfect lip syncing by following these simple steps:

First, head on over to AKOOL’s Realistic Avatar platform.

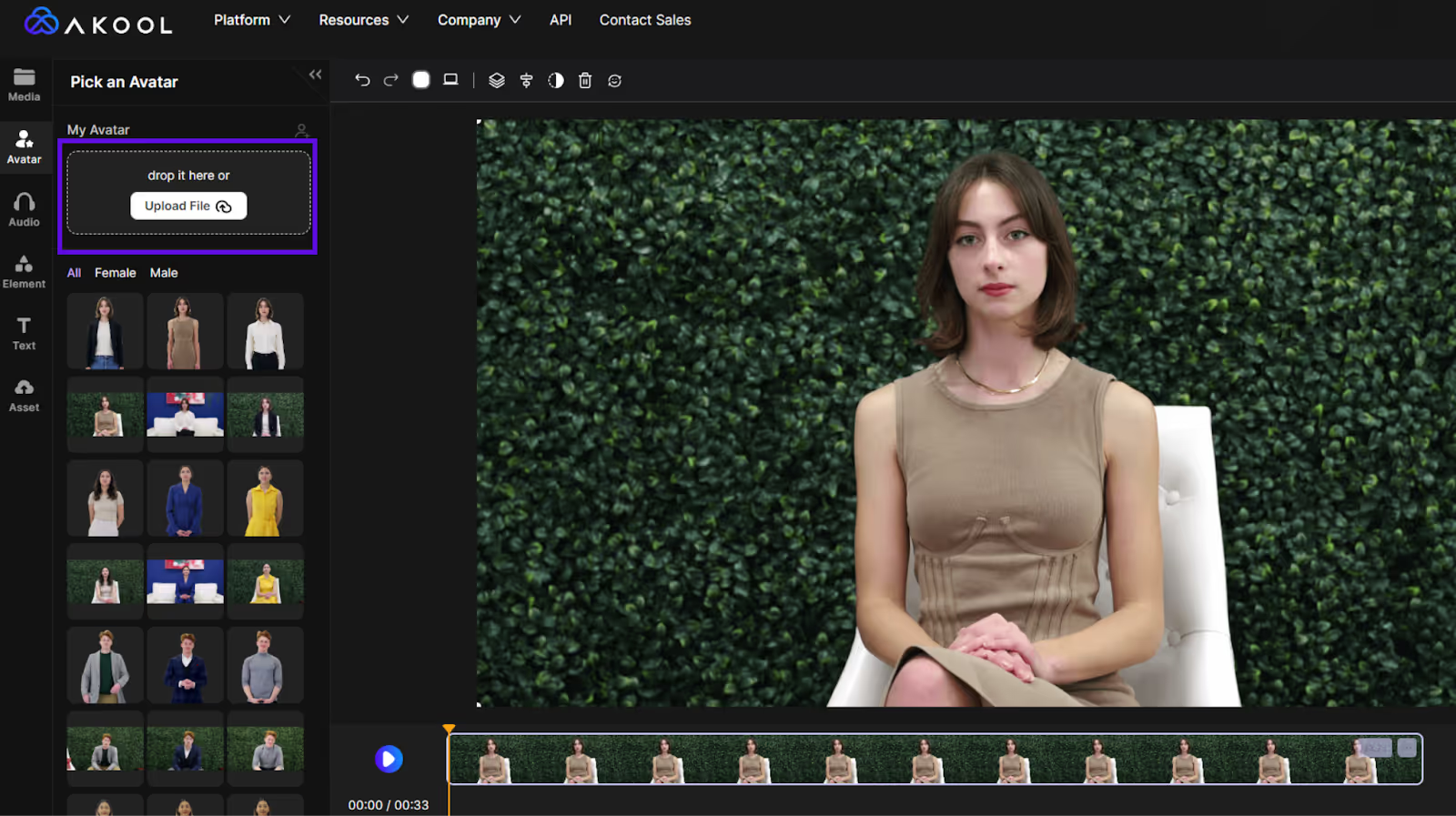

Step 1: Upload Your Avatar

The first step is to upload an image featuring the model, AI avatar or virtual character. You can also use AKOOL’s stock avatars.

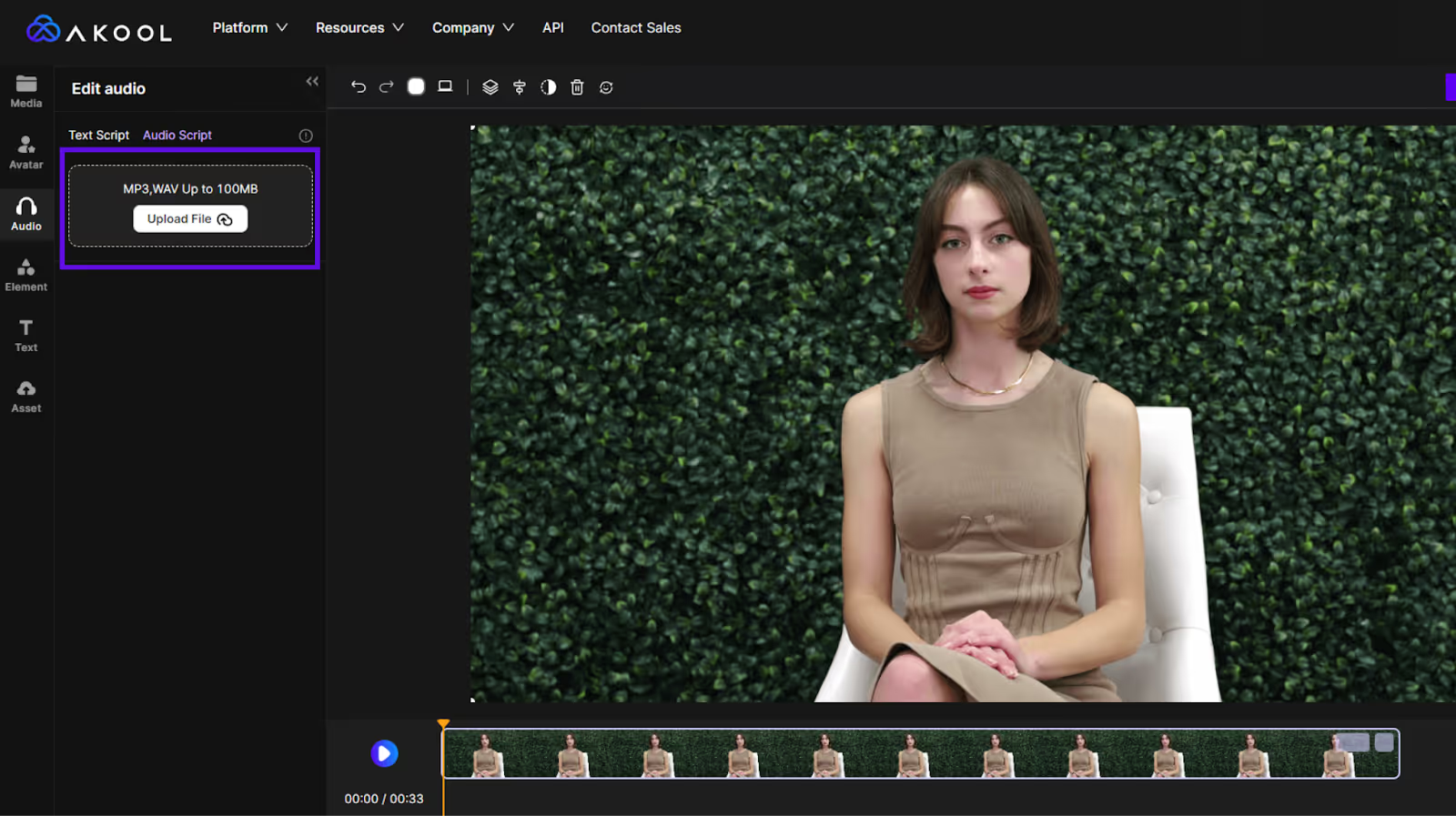

Step 2: Upload an Audio File or Insert a Text Script

Next, you can upload an audio file or insert a text script.

Step 3: Click Generate Premium Results

Once your avatar and your audio file or text script are ready, click “Generate Premium Results” in the top right-hand corner.

AKOOL's AI-powered algorithms will then analyze the audio waveform and phonetic information, mapping the lip movements to the corresponding speech patterns. This process leverages advanced machine learning techniques to ensure accurate and natural-looking lip sync results.

Using Auto Lip Sync for Video Localization

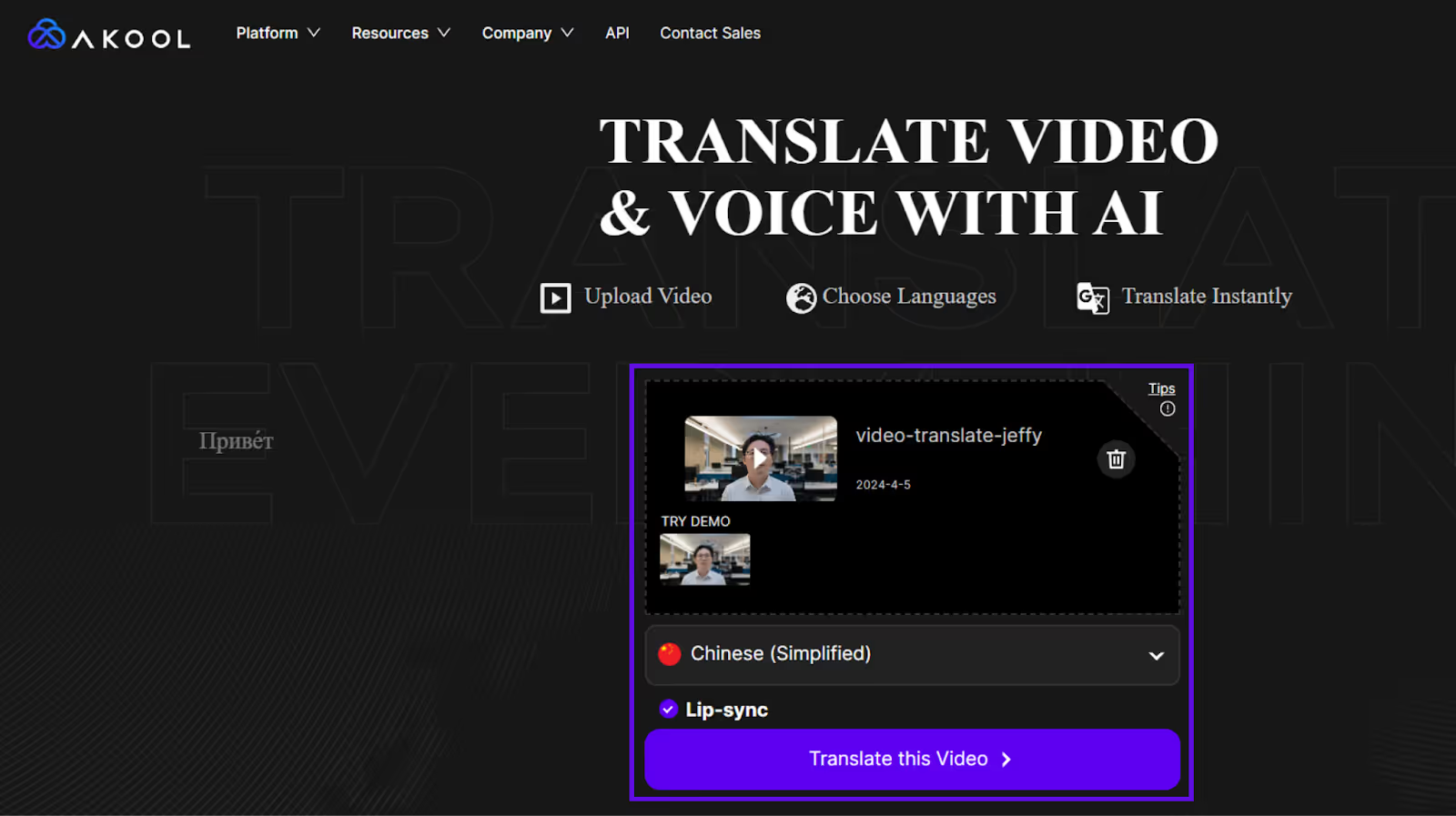

AKOOL also has a tool that allows you to seamlessly translate a video and auto lip sync it in a wide range of languages with just a few clicks.

You just have to upload your video and select a target language, then click on "Translate This Video" and wait for the results!

For example, let’s say there’s a product demo video in English, but you want to tap into the market in China. Well, you can just upload that video and then select the target language.

Then, just make sure “Lip-Sync” is checked and click “Translate this Video.”

Once you've done that, you would just have to wait for the results and you’ll have a translated and auto lip-synced video!

You can even do this for movies. Rather than having dubbed videos where the audio and mouth movements don’t sync up, you can upload clips of movies and translate them with auto lip sync. Keep in mind, however, that you’ll have to upload the movie as separate clips and then combine the translated clips afterward.
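The clip-by-clip workflow for movies can be sketched as follows. This is a minimal illustration, not AKOOL's own tooling: it assumes you split and rejoin the footage yourself with the FFmpeg command-line tool, and the clip length, file names (`clip_000.mp4`, `translated_000.mp4`), and helper functions are all hypothetical. The sketch only builds the commands; it does not run them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    index: int
    start: float     # offset into the movie, in seconds
    duration: float  # clip length, in seconds


def plan_clips(total_seconds: float, max_clip_seconds: float) -> list[Clip]:
    """Cut a movie of total_seconds into back-to-back clips no longer than max_clip_seconds."""
    if total_seconds <= 0 or max_clip_seconds <= 0:
        raise ValueError("durations must be positive")
    clips: list[Clip] = []
    start = 0.0
    while start < total_seconds:
        duration = min(max_clip_seconds, total_seconds - start)
        clips.append(Clip(len(clips), start, duration))
        start += duration
    return clips


def split_command(source: str, clip: Clip) -> list[str]:
    """FFmpeg arguments that extract one clip without re-encoding.

    With stream copy, cuts snap to keyframes, so boundaries are approximate.
    """
    return [
        "ffmpeg", "-ss", str(clip.start), "-i", source,
        "-t", str(clip.duration), "-c", "copy",
        f"clip_{clip.index:03d}.mp4",
    ]


def concat_list(clips: list[Clip]) -> str:
    """Contents of the list file read by FFmpeg's concat demuxer.

    Assumes each translated clip was saved as translated_NNN.mp4 (hypothetical names).
    """
    return "".join(f"file 'translated_{c.index:03d}.mp4'\n" for c in clips)


def concat_command(list_path: str, output: str) -> list[str]:
    """FFmpeg arguments that join the translated clips listed in list_path."""
    return ["ffmpeg", "-f", "concat", "-safe", "0", "-i", list_path, "-c", "copy", output]


if __name__ == "__main__":
    # Hypothetical 150-second scene split into clips of at most 60 seconds.
    clips = plan_clips(150, 60)
    for clip in clips:
        print(" ".join(split_command("movie.mp4", clip)))
    print(concat_list(clips), end="")
    print(" ".join(concat_command("clips.txt", "movie_translated.mp4")))
```

Each extracted clip would be uploaded and translated on its own, then downloaded under the matching `translated_NNN.mp4` name before running the concat command.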

Understanding Auto Lip Syncing for AI Spokespersons

Auto lip syncing refers to a process in which the lip movements of a model, avatar or virtual character are automatically synchronized with the corresponding audio or speech.

Auto lip sync uses advanced algorithms to analyze the audio waveform and phonetic information, generating realistic lip movements that closely resemble human speech patterns.
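To make the idea of mapping phonetic information to lip movements concrete, here is a deliberately simplified sketch. It is not AKOOL's algorithm, which relies on machine learning; it assumes the audio has already been converted into timed phonemes, and the small lookup table, mouth-shape names, and function name are illustrative assumptions only.

```python
# Hypothetical, heavily reduced table from phoneme symbols to mouth shapes (visemes).
PHONEME_TO_VISEME = {
    "P": "closed", "B": "closed", "M": "closed",
    "F": "teeth_on_lip", "V": "teeth_on_lip",
    "AA": "open", "AE": "open", "AH": "open",
    "OW": "rounded", "UW": "rounded", "W": "rounded",
    "IY": "spread", "EH": "spread",
    "SIL": "rest",
}


def phonemes_to_visemes(timed_phonemes):
    """Turn (phoneme, start, end) tuples into (viseme, start, end) keyframes.

    Phonemes missing from the table fall back to the "rest" shape, and
    back-to-back phonemes that share a mouth shape are merged into one keyframe.
    """
    keyframes = []
    for phoneme, start, end in timed_phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, "rest")
        if keyframes and keyframes[-1][0] == viseme and keyframes[-1][2] == start:
            # Same mouth shape continues: extend the previous keyframe.
            keyframes[-1] = (viseme, keyframes[-1][1], end)
        else:
            keyframes.append((viseme, start, end))
    return keyframes


if __name__ == "__main__":
    # Hand-written timings for the word "mama"; real timings would come from audio analysis.
    word = [("M", 0.0, 0.1), ("AA", 0.1, 0.3), ("M", 0.3, 0.4), ("AA", 0.4, 0.6)]
    for keyframe in phonemes_to_visemes(word):
        print(keyframe)
```

A production system would predict mouth shapes directly from the audio and blend between them smoothly, but the sketch shows the core relationship between speech sounds and the lip positions a viewer expects to see.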

Auto lip sync offers several benefits for AI spokespersons. Here are a few at the top of the list:

- Realism and Natural Lip Movements: Using auto lip sync tools can result in lip movements that look highly realistic and natural. This is particularly useful when creating an AI spokesperson—or even a movie—as it ensures that the avatars appear engaging and lifelike to viewers.

- Consistency across Multiple Videos/Presentations: By automating the lip sync process, brands can maintain consistent and cohesive lip movements across multiple videos or presentations.

- Time and Cost Efficiency: Manual lip syncing can be a time-consuming and labor-intensive process—especially for longer videos or complex dialogue. Auto lip sync technology reduces the time and effort required, resulting in cost savings for brands and companies.

Limitations of Manual Lip Syncing

Before auto lip sync technology, creating realistic lip movements for videos relied heavily on manual techniques. However, this approach comes with several limitations:

- Difficulty in Achieving Accurate and Natural Lip Movements: Even for skilled animators, achieving perfectly natural and accurate lip movements that precisely match the audio can be challenging. This is especially difficult when dealing with subtle nuances of speech, different accents, emotional expressions, or complex dialogue sequences.

- Lack of Consistency across Different Videos/Presentations: Relying on manual techniques makes it problematic to maintain consistent and cohesive lip movements across multiple videos or presentations featuring the same spokesperson. Even small variations in the animation process can lead to noticeable inconsistencies in the lip sync, disrupting the overall experience.

- Scalability and Flexibility Challenges: Manual lip syncing becomes increasingly difficult and time-consuming when dealing with large volumes of content or the need to update or modify the spokesperson's lip movements frequently.

- Potential for Human Error: Since manual lip syncing is a painstaking process that requires significant attention to detail, there is a higher risk of human error creeping in, such as misaligned lip movements or inconsistencies in the animation quality.

- High Costs: Employing skilled animators or visual effects artists to manually lip sync characters can be costly, especially for longer or more complex projects, making it less accessible for companies or organizations with limited budgets.

Introducing Auto Lip Syncing Tools and Software

There are now software solutions available to overcome the limitations of manual lip syncing and meet the increasing demand for realistic AI spokespersons. These solutions offer advanced auto lip sync capabilities tailored specifically for AI avatars and virtual characters.

One of these is AKOOL's suite of auto lip sync tools, which employs cutting-edge machine learning algorithms to generate highly accurate and lifelike lip movements for AI avatars.

This technology aims to simplify the process of creating engaging and believable AI spokespersons, enabling brands and companies to deliver their messaging in a compelling and immersive way.

Advantages of Auto Lip Synced Videos

By leveraging auto lip sync technology to create AI spokespersons or translate videos, brands and companies can enjoy numerous advantages:

- Improved Brand Engagement and Customer Experience: A realistic and engaging AI spokesperson can help capture audience attention and create a more immersive and memorable experience, ultimately improving brand engagement and customer satisfaction.

- Consistency in Messaging across Multiple Platforms/Campaigns: With auto lip sync, brands can ensure consistent and cohesive messaging delivered by the same AI spokesperson across various platforms and campaigns, reinforcing brand identity and recognition.

- Cost and Time Savings Compared to Traditional Spokespersons: Creating and maintaining an AI spokesperson with auto lip sync can be more cost-effective and time-efficient compared to hiring and managing traditional human spokespersons or actors.

- Versatility and Scalability: AI spokespersons can be easily adapted and scaled to deliver messaging in multiple languages, accents, or styles, providing brands with greater flexibility and reach for their marketing and communication efforts.

- Breaking Into New Markets: Video localization plays an important role in facilitating global reach. With AKOOL's Video Translate tool, you can seamlessly translate a video and tap into new markets.

Real World Use Cases of AI Auto Lip Sync

Several forward-thinking brands and companies have already embraced the power of auto lip synced AI spokespersons, leveraging this technology to create engaging and memorable experiences for their audiences. Here are a few real-world examples:

- Alba Renai, an AI model and spokesperson, has been used by a large television company in Spain to drum up demand and views. For example, the AI model was used to create a promotional video for the Survivor series.

- Lay’s used AI auto lip sync to allow people to share “personalized” messages from Lionel Messi.

- Dove has also used AI auto lip sync to create a marketing campaign.

These examples demonstrate the versatility and effectiveness of auto lip synced AI spokespersons across various industries and use cases, showcasing their potential to create compelling and engaging experiences for audiences.

There are a number of ways you can use auto lip sync for content creation. Here are a few we thought of:

- Multilingual and Localized Content: As we mentioned, auto lip sync technology can be a powerful tool for video localization, enabling brands to create culturally relevant content for diverse global audiences. By automating lip sync for different languages and accents, companies can ensure consistent and authentic messaging while minimizing the cost and effort associated with traditional localization methods.

- Educational and Training Content: In industries such as healthcare, finance, or technology, creating educational and training content is essential for upskilling employees, customers, or clients. Auto lip sync can be used to create realistic AI instructors or virtual trainers that deliver engaging and informative content with natural lip movements, enhancing comprehension and retention.

- Brand Storytelling and Advertising: Storytelling is a powerful tool in marketing, and auto lip sync technology can bring brand narratives to life in a visually compelling way. Brands can craft captivating stories and advertisements featuring AI avatars or virtual characters as spokespersons, delivering messaging with realistic lip movements that amplify emotional resonance and brand recall.

- Product Launches and Demonstrations: Introducing a new product or service to the market is a critical moment for any brand. With auto lip sync, companies can create an AI spokesperson or virtual character to deliver engaging and informative product demonstrations or launch presentations. The AI avatar's lifelike lip movements will captivate audiences and ensure that key product features and benefits are communicated effectively.

Ethical Considerations for AI Spokespersons

Although AI spokespersons offer several advantages and opportunities, it's crucial to address potential ethical concerns associated with this technology. One of the significant considerations is the possibility of AI impersonation or deception, where AI avatars could be used to mislead or deceive audiences by representing themselves as real individuals.

To mitigate these concerns, brands and companies can prioritize transparency and clearly communicate that their spokespersons are AI-generated avatars.

Key Factors in Lip Sync Benchmarking

To accurately assess the performance of individual tools, it’s critical to use a set of objective criteria and key performance indicators to understand which platform produces the best results. At AKOOL, we use the following criteria in our lip sync benchmarking:

Accuracy: How well does the tool sync the original video with the new audio? The best tools produce hyper-realistic videos that look like they were originally shot in the target language.

Speed: How fast is the lip syncing process? AKOOL can create professional-grade videos in a matter of minutes, without sacrificing quality or realism.

Customization: Does the platform provide users with the ability to customize their videos? AKOOL allows users to select up to 30 different languages, dialects, speaking patterns, and voices.

In addition, users should also assess the realism of facial movements, the quality of the video itself, and the cost of the platform.
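One way to turn these criteria into a single comparable number is a weighted scorecard. The sketch below is an assumption about how such a comparison could be organized, not the scoring method used in the benchmark: the weights, the 0-to-1 rating scale, and the placeholder tool names and ratings are all hypothetical.

```python
# Hypothetical weights for the three criteria named above; adjust to your priorities.
WEIGHTS = {"accuracy": 0.5, "speed": 0.3, "customization": 0.2}


def overall_score(scores, weights=WEIGHTS):
    """Weighted average of per-criterion ratings, each expected on a 0-to-1 scale."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight


def rank_tools(results, weights=WEIGHTS):
    """Return tool names ordered from highest to lowest overall score."""
    return sorted(results, key=lambda name: overall_score(results[name], weights), reverse=True)


if __name__ == "__main__":
    # Placeholder ratings for illustration only; they are not measured results.
    results = {
        "tool_a": {"accuracy": 0.9, "speed": 0.5, "customization": 0.5},
        "tool_b": {"accuracy": 0.6, "speed": 0.9, "customization": 0.9},
    }
    for name in rank_tools(results):
        print(name, round(overall_score(results[name]), 2))
```

Additional criteria such as facial realism, video quality, or cost can be folded in by adding entries to the weights, since the score is normalized by the total weight.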

Comparative Analysis of Lip Sync Tools

AKOOL

AKOOL has rapidly become known as one of the best lip sync tools on the market. The cutting-edge platform allows users to create hyper-realistic, professional-grade lip matching videos that outperform the competition and resonate with users. In particular, users rave about the platform’s lip syncing accuracy, incredibly fast processing time, and the wide range of customization options.

Funimate

Funimate is one of the most popular lip syncing apps available today. The platform offers users a wide range of customization options and editing tools, including special effects and custom filters. However, Funimate has a relatively slow processing speed, and our lip matching benchmarking tests show that the quality of its output is lower than AKOOL’s, leaving viewers with a less immersive experience.

Triller

Triller is a major name in the world of lip syncing and is used to craft funny memes and video shorts for social media platforms like TikTok and Instagram Reels. While the platform is incredibly fun to use and offers a wide variety of filters and editing options, many enterprise users remark that the quality is not suitable for professional use. The platform struggles with realism and is best suited for personal social media use, rather than high-impact marketing campaigns.

Case Studies: Real-World Performance

Multilingual Advertising

We tested each of these tools in a head-to-head lip matching benchmark test to see which platform can produce the highest quality videos in a new language. We found that AKOOL was able to produce the best result, with the most accurate translation and realistic lip syncing. Triller was a close second in the test; however, many of the facial movements were slightly off—resulting in a poorer user experience and broken immersion.

Animated Production

On this lip matching benchmark test, Funimate was able to outperform Triller in several key areas, including realism, lip sync quality, and immersion. However, AKOOL still produced the highest quality output of the three platforms, outperforming both Funimate and Triller in realism, video resolution, and viewer immersion across each sample.

Social Media Shorts

Each of the three tools measured in this lip matching benchmark test is capable of producing a high-quality video for social media. Triller and Funimate both produced quality videos that matched a user’s new audio track with an existing video. However, AKOOL’s output is consistently more realistic and provides a better user experience than the competition. That’s because AKOOL does a better job of syncing facial movements with the new audio and produces a higher-resolution video that simply looks better on modern devices.

Your B2B & B2C Teams Can Make Better Lip Sync Videos!

The results of our lip matching benchmark test are clear: while there are several platforms capable of creating passable lip syncing videos, AKOOL is simply a step above the competition. The cutting-edge platform is capable of creating a realistic video in dozens of popular languages, allowing enterprise marketing teams and advertising agencies to create engaging content that can resonate with audiences across the world.

AKOOL outperformed the competition in several key areas, including accuracy, customization options, processing speed, and video quality. Novice users can select from a variety of popular tools to create social media shorts and funny memes. However, companies intent on creating professional-grade marketing campaigns and content that will provide an immersive experience for their customers should look to AKOOL for their next lip sync project.