Introduction

The rapid growth of online and blended learning has universities seeking new ways to create engaging video content at scale. AI tools now allow an educator to simply input a script and, within minutes, get a polished video with a virtual presenter.

This means instructors can save time on recording and editing, while delivering content that’s consistent, engaging, and scalable to large or remote student cohorts. Crucially, these platforms also support multilingual delivery – a single professor’s lesson can be automatically generated in various languages, greatly expanding accessibility for international students. In short, AI video generators are reshaping higher ed by combining speed, scalability, and personalization, letting universities meet the growing demand for video-based learning without overburdening faculty.

1. Synthesia – Popular Text-to-Video Avatar Studio

Synthesia is one of the most recognized names in AI video generation and has become a go-to tool for creating professional-looking videos from plain text. Using Synthesia, an educator can simply type a script, choose a lifelike AI presenter (avatar), and generate a video of that avatar delivering the lines. The platform’s claim to fame is its extensive library of avatars and languages – over 140 diverse AI presenter avatars are available, and it supports video creation in 120+ languages. This breadth makes Synthesia especially attractive for universities looking to localize content for students around the world.

From the script and the chosen avatar, Synthesia produces a polished video with a realistic presenter, without any need for cameras or video editing skills. Many organizations use Synthesia for corporate training and marketing, and it has also gained popularity in e-learning content creation for its reliability and output quality.

Key Features:

- Large Avatar & Voice Library: Synthesia provides a huge selection of virtual presenters of different ages, ethnicities, and styles (business formal, casual, etc.), plus an array of voice options. It supports 120+ languages with native-like pronunciation, allowing one to easily create multilingual course videos.

- High-Quality, Consistent Output: The videos from Synthesia have a polished, studio-quality feel. The avatars are among the most realistic in appearance and movement. The platform also offers fast rendering, often generating a video in minutes – useful when you need to update lecture content quickly.

- Templates and Screen Recording: Synthesia includes pre-made templates for common scenarios (like instructional modules, training snippets) to speed up content design. It even has a screen recorder integration, so an educator can capture a screencast (e.g. a software demo or slideshow) and have an avatar narrate it – great for tech tutorials or walk-throughs.

- Team and Brand Features: Designed with enterprise in mind, Synthesia allows team collaboration (multiple faculty or staff can work on videos), and easy branding with logos and custom backgrounds. Universities can also commission custom avatars – for instance, having a specific professor or a virtual university ambassador created as a unique avatar (though this is a premium enterprise feature). API access is available for institutions that want to integrate Synthesia’s video generation into their own systems or LMS.

Limitations: Synthesia’s sophistication comes with some caveats. Notably, there is no fully free tier – new users can try a one-time demo video, but to create real videos you must subscribe to a paid plan. The pricing can be a bit high for individual educators, and the lower-tier plans limit the number of video minutes per month. Another limitation is customization: you are largely limited to the avatars and animations that Synthesia provides. While those are high-quality, you cannot deeply customize an avatar’s appearance or gestures (unless you pay for a custom avatar service). Also, Synthesia does not offer voice cloning for regular users – you must use the built-in AI voices for narration, which means a professor can’t easily make the avatar speak in their own voice (unless an enterprise arrangement is made). Finally, some users note that although avatars are very good, a few can seem slightly stiff for very emotive expressions – Synthesia continually improves this, but the avatars are best for a neutral, professional tone.

Use Cases: Synthesia is well-suited for creating standard course videos and training modules at scale. For example, an instructor can generate a series of micro-lectures with avatars, saving themselves from being on camera for each topic. This has already been adopted in e-learning – teachers use Synthesia to produce lecture videos with a virtual presenter, avoiding the need to film themselves but still providing a face for students to see. Another use is in creating multilingual course materials: a university media team could make an English instructional video, then quickly regenerate it in Spanish and French using Synthesia’s language options, to better serve diverse student groups. It may not have the real-time interactivity or voice-clone personal touch that Akool (covered next) offers, but for pre-recorded lectures, training, and presentations, Synthesia remains a top contender that delivers quality and scale.

2. Akool – Real-Time Avatar Platform for Education Videos

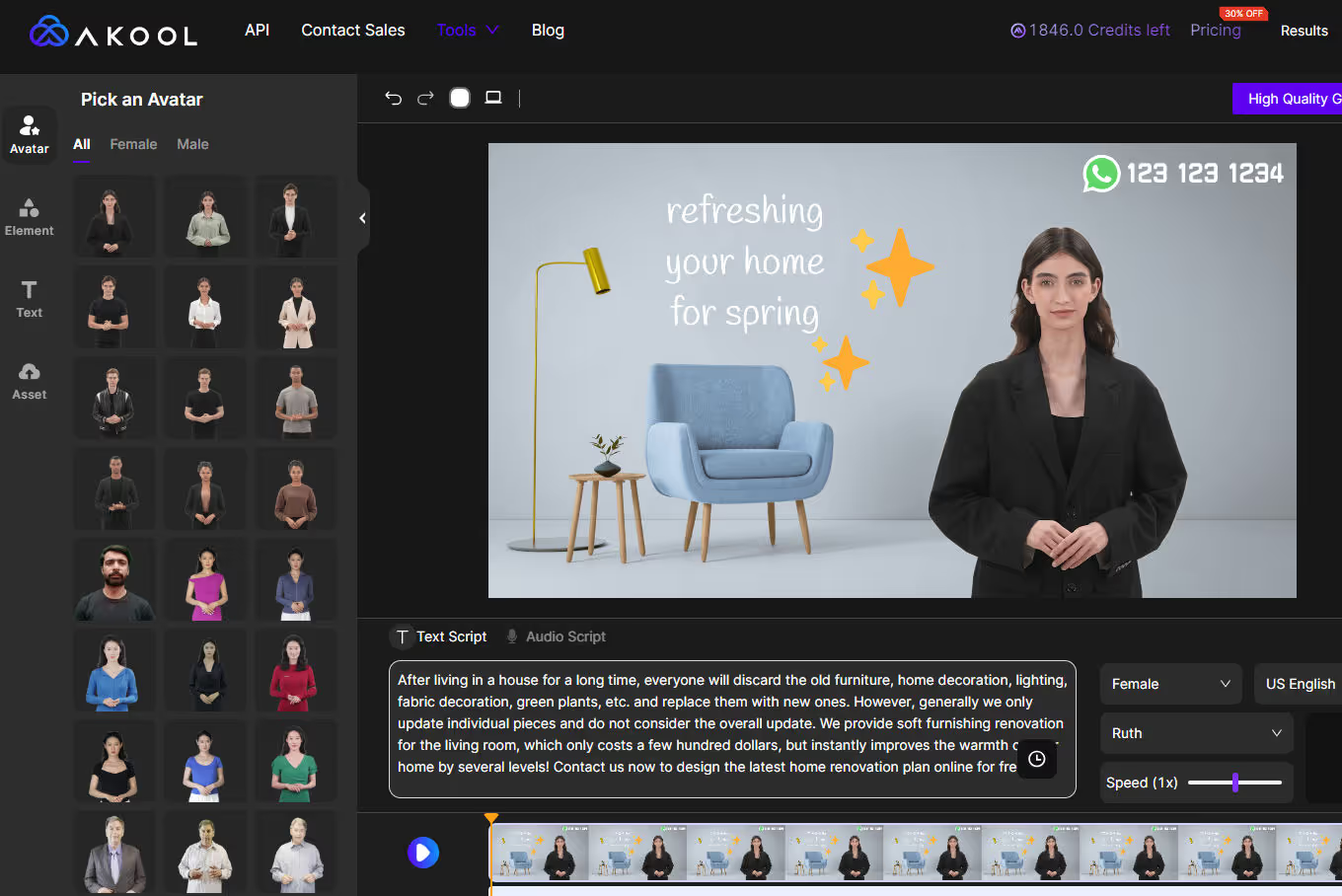

Akool is an all-in-one AI video generation platform and our top pick for 2025. Its standout feature is real-time interactive avatars – lifelike digital presenters that can be driven live or pre-recorded to deliver lectures and announcements. In practical terms, Akool allows a university to create a “digital professor” avatar that speaks any script instantly, even live in a Zoom class or webinar, thanks to Akool’s streaming avatar technology.

The avatars are highly realistic, with rich facial expressions and gestures, effectively bridging the gap between a virtual presenter and a human instructor. Akool also offers powerful multilingual capabilities and voice cloning, enabling an instructor to generate the same video lesson in dozens of languages – potentially with the professor’s own cloned voice for authenticity. Despite its advanced features, Akool maintains an easy, no-code interface: educators can simply type a script, choose from 80+ avatar presenters (or even create a custom avatar of themselves), and generate the video. The result is a high-quality lecture or tutorial video that can be produced in minutes instead of days.

Key Features:

- Real-Time Streaming Avatars: Akool’s avatars can present live and respond in real time. This is ideal for interactive virtual lectures or Q&A sessions – a professor’s digital twin can appear live with natural gestures and expressions. This immediacy sets Akool apart from tools that only offer pre-rendered videos.

- Multilingual & Localization: Akool supports dozens of languages. You can create one video and automatically get versions in, say, English, Spanish, Chinese, etc., within minutes. This built-in translation/localization is invaluable for universities with international students or multilingual curricula.

- Voice Cloning: The platform can clone a user’s voice. A professor could have their avatar speak in their own voice, making the AI-generated lectures feel personal and familiar to students. Alternatively, universities can clone a signature voice (e.g. a notable narrator) for all their materials.

- Seamless Integrations: Akool provides APIs and plugins to integrate AI video into existing workflows. For instance, an Akool Live Camera plugin lets your avatar join live classes or meetings on platforms like Zoom. It also supports LMS integration for embedding videos into course platforms.

Limitations: Akool offers a free trial with some restrictions (e.g. limits on video length and output quality). Fully unlocking features like 1080p/4K resolution and faster rendering requires a paid plan. However, even the trial is useful for testing its capabilities. Another consideration is that the richness of Akool’s real-time features may come with a learning curve for new users who want to leverage live avatars or API integrations. That said, the platform is designed to be user-friendly, and many educators will find the basic “type and generate” workflow intuitive.

Use Cases: Akool’s flexibility makes it ideal for numerous academic scenarios. Faculty can create digital lecturers or TA avatars to deliver course content, freeing up time otherwise spent recording lectures. Notably, educators have used Akool to build digital teachers that present lessons in the instructor’s cloned voice, saving time preparing video lectures. Language departments or international programs can benefit from the instant translation: for example, create an English lecture and let Akool output French, Arabic, and Japanese versions for global learners. Administrative offices might use Akool avatars for student orientation videos or FAQ tutorials (imagine a friendly virtual guide explaining campus services around the clock). The ability to appear live via avatar also opens up creative possibilities – a guest speaker avatar could “visit” multiple classes simultaneously, or a professor on leave could still interact with students virtually in real time. With its combination of real-time interaction and high-quality output, Akool stands out as a leader for institutions aiming to scale teaching through AI while keeping a human touch.

3. HeyGen – Versatile Video Maker with Custom Voices

HeyGen (formerly known as Movio) is another popular AI video platform that makes video creation as easy as making a slideshow. Like Synthesia, it’s a text-to-video system: you enter your script, pick an AI avatar and voice, and generate a video of a talking presenter. HeyGen’s unique strengths lie in its simplicity and some creative features tailored for business and education use. One standout capability is the option to upload your own voice recording to create a custom AI voice for the avatars.

In essence, this is a lighter form of voice cloning – for example, a professor could record a sample of their voice, and HeyGen will create a voice that the avatar can use, preserving the instructor’s tone. Another strength of HeyGen is its support for multi-scene videos: you can break your script into segments and have different backgrounds, slides, or even different avatars for each scene.

Key Features:

- Wide Voice & Language Selection: HeyGen comes with 300+ AI voices spanning many accents, genders, and styles in more than 40 languages. This means educators can find a voice that closely matches their preference or their audience’s locale. Combined with the multilingual support, it’s straightforward to localize a video for global learners.

- Custom Voice Avatar: A signature feature – the ability to create a custom voice by uploading a recording of your own voice. The system generates an AI voice that sounds like you, which avatars can then use. For a university, this is great for maintaining authenticity; e.g., an instructor’s avatar can carry their real voice, or a department can have all videos use a particular narrator’s voice for consistency.

- Face Swap & Creative Tools: HeyGen includes a face swap feature, where you can take someone’s face (from a photo) and map it onto an avatar. While more of a novelty for education, this could be used to create a quick avatar of a specific person (with permission) or for fun internal videos. HeyGen also lets you add background music from its library to make videos more engaging.

- Scene Composition & Templates: Unlike single-shot avatar tools, HeyGen enables multi-scene compositions. You can create a sequence: for example, Scene 1 with an avatar introducing a topic, Scene 2 showing a slide or image (with voiceover continuing), Scene 3 the avatar returns for a summary. This storytelling ability makes academic content more dynamic – essentially turning a PowerPoint into a narrated video. There are ready templates for common structures, so faculty can drag-and-drop scenes easily.

Limitations: While HeyGen’s avatars are quite realistic, some fine details are a notch below Akool’s ultra-expressive avatars or Synthesia’s huge variety. The face swap results can be hit-or-miss – if the source photo’s lighting or angle doesn’t match the avatar, the outcome may look unnatural. Importantly, HeyGen’s free version is limited: exported videos carry a watermark and have strict length limits. In practice, this means serious use of HeyGen will require a paid plan, especially for longer lectures or unbranded content. Lastly, the built-in editing is focused on scene arrangement; for complex video edits or animations beyond what the scenes allow, you might need to download the video and use another editor. For most lecture-style content, however, HeyGen’s tools are sufficient.

Use Cases: HeyGen is a solid choice for instructors and staff who want to quickly assemble lecture snippets, course introduction videos, or promotional content. A professor could use HeyGen to create a short course overview video: start with their avatar (using the professor’s own voice via the custom voice feature) greeting students, then switch to a scene with key bullet points or images about the syllabus, and conclude with the avatar encouraging students to reach out with questions. Additionally, universities might use HeyGen for internal communications: a friendly avatar can deliver campus news or training to staff, with scenes mixing in charts or photos as needed. Overall, HeyGen offers a flexible, slide-based approach to AI video that many in academia will find approachable, with the bonus of maintaining one’s own voice in the final product.

4. Pictory – Automated Text-to-Video for Course Content

Pictory takes a different approach to AI video generation – instead of focusing on talking human avatars, it specializes in turning written content into videos with voiceovers, captions, and stock visuals.

Pictory comes with a large library of over 10 million royalty-free images and video clips to illustrate content. You can choose from various realistic AI voices for narration, or even upload your own recorded narration if you prefer. The interface is beginner-friendly – you get a storyboard of scenes that you can adjust (for example, edit the text, highlight certain words, or change the background visual). For university use, Pictory can be a boon for quickly creating course recap videos, learning modules, or promotional materials without any filming or complex editing. It’s essentially a fast content creation engine: one that is particularly useful when you want to convert text-heavy material into an audiovisual format to better hold students’ attention.

Key Features:

- Script-to-Video Conversion: Simply feed in text (or a webpage) and Pictory’s AI will generate a video with that text broken into digestible captions, matched with relevant visuals and transitions. This is ideal for transforming lecture transcripts or research papers into summary videos. The AI does the heavy lifting of finding imagery and creating a coherent visual narrative, saving educators a lot of time.

- Automatic Video Summarization: Pictory can also take a long video (say, a recorded lecture or a webinar) and automatically extract key highlights to create a shorter summary video. This feature is useful for making “previous class in review” snippets or condensing a 1-hour guest lecture into a 5-minute highlights reel for students.

- Extensive Stock Media Library: The platform provides access to a vast library of stock images, video clips, and music tracks (on higher-tier plans, up to 12 million assets). This ensures that for almost any topic, Pictory can find relevant visuals. For example, a history professor’s script about World War II might automatically pull appropriate historical photos or footage. All media is royalty-free and integrates seamlessly.

- AI Voiceovers and Editing: Pictory includes a built-in AI voice generator with a range of voices and accents to narrate your videos. The voices are quite natural-sounding (and you can choose male/female, different tones). You can also tweak timing, add pauses, or emphasize words to make the narration more effective. Additionally, Pictory’s editor lets you trim scenes, adjust the text captions, and even automatically remove filler words or silences if you upload your own footage. In essence, it doubles as a simple video editor optimized for text-based editing.

Limitations: Pictory’s focus on “faceless” videos means it does not generate a talking human avatar. If your goal is to have a presenter on screen, Pictory isn’t the tool for that – it’s more akin to an automated video slideshow creator. The voice customization is limited compared to other tools: you can choose from the provided voices, but you can’t fine-tune the emotion or intonation much, and true voice cloning of a specific person’s voice isn’t available. Regarding free usage: Pictory offers a free trial/plan that allows up to 3 video projects of up to 10 minutes each (and outputs are limited to 720p with a small watermark). For ongoing use or higher quality (1080p/4K, longer videos, more stock content, etc.), a paid subscription is needed. Finally, Pictory’s videos are best for informational or explainer content; they might not have the high entertainment polish or dynamic motion that some other AI video tools (or a real editor) could achieve, since Pictory doesn’t do complex animations beyond slide transitions.

Use Cases: Pictory shines when faculty have text-based content that they want to quickly turn into engaging video learning materials. For instance, an instructor can take a written lesson summary or an article and use Pictory to generate a short video recap for students – complete with captions (useful for accessibility) and voiceover. This can cater to students who prefer video learning or need a quick refresher before exams. Pictory is also handy for creating MOOC or online course content on a budget; a course creator can transform their course notes into bite-sized videos for each module without filming anything. Pictory has been noted as a useful tool for educational professionals to create course content quickly, especially thanks to its easy AI voiceovers and automation. In summary, Pictory is not about photorealistic avatars, but it is excellent for rapidly producing informative video content from textual materials – a valuable capability in higher ed where time and resources are often limited.

5. Runway – Generative Video for Creative Learning Content

Runway (often referred to as Runway ML) is at the cutting edge of AI video generation, but in a different way from the avatar-centric tools above. Rather than creating talking head videos, Runway enables users to generate creative video clips from text, images, or other videos using generative AI models. Additionally, Runway includes powerful AI editing tools: you can remove backgrounds without a green screen, erase or replace objects in a video, or apply stylistic filters (imagine turning a real video into a cartoon style) all with AI assistance. This platform is cloud-based and collaborative, meaning multiple users (like a teacher and students) can work on a project in a browser simultaneously. While Runway isn’t designed for making lecture videos with narration, it opens up possibilities for academic creativity and visual explanation – especially in fields like design, media arts, or any discipline that can benefit from visual simulations and experiments.

Key Features:

- Multi-Modal Video Generation: Runway lets you create videos from different types of inputs. You can do text-to-video (enter a written prompt and get a short video), image-to-video (upload a picture and the AI will animate or transform it), or even video-to-video (give it an existing video and apply a new style or make variations). This flexibility allows for a lot of creative experimentation in a classroom – from visualizing concepts to creating art.

- Advanced Generative Models: The platform uses state-of-the-art generative AI models that produce fairly coherent and detailed results for short clips. The latest models can keep a generated scene consistent for around 3–8 seconds, which is impressive for AI-generated content. For example, you could generate a quick clip of a historical battle scene or a chemical reaction to supplement a lecture.

- AI-Powered Video Editing: Runway includes tools that leverage AI for editing tasks that normally require time or skill. You can remove a video background with one click (useful for isolating a presenter or object), erase unwanted objects or people from footage, and do style transfer (make a video look like it’s painted, or change day to night, etc.). This makes Runway not just a generator but a handy post-production suite for educational video projects.

- Collaboration and Export: Since Runway is cloud-based, it doesn’t require special hardware – any laptop with a browser works. Multiple team members can collaborate on a project in real time, which is great for student group projects or remote teamwork. When videos are ready, you can export them in various aspect ratios and formats, helpful for sharing on different platforms (e.g. embedding in slides vs posting on a class site).

Limitations: Runway’s generative magic is currently best suited for short clips. By design, the AI can typically generate only up to about 16 seconds of video per prompt. For longer content, you would need to stitch together multiple clips, which can be time-consuming and may result in inconsistent style unless carefully managed. Another consideration is pricing: Runway uses a credit-based model. You get a certain amount of generation credits on paid plans (and some free credits to start), but complex tasks or lots of generation can eat through them quickly, and unused credits may not roll over month to month.
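To get a feel for the stitching workload described above, here is a rough planning sketch in Python. It only does the arithmetic: it does not call Runway or any other service, and the function names and the 16-second default are illustrative assumptions based on the approximate per-prompt cap mentioned in this article, not official figures.

```python
import math


def clips_needed(target_seconds: float, max_clip_seconds: float = 16.0) -> int:
    """Estimate how many generated clips are needed to cover a target length.

    max_clip_seconds is an assumed per-prompt cap (about 16 s in this article).
    """
    if max_clip_seconds <= 0:
        raise ValueError("max_clip_seconds must be positive")
    if target_seconds <= 0:
        return 0
    return math.ceil(target_seconds / max_clip_seconds)


def plan_clips(target_seconds: float, max_clip_seconds: float = 16.0) -> list[float]:
    """Return a list of clip durations that together add up to the target length."""
    count = clips_needed(target_seconds, max_clip_seconds)
    if count == 0:
        return []
    # Every clip except the last uses the full cap; the last covers the remainder.
    full_clips = [max_clip_seconds] * (count - 1)
    last_clip = target_seconds - max_clip_seconds * (count - 1)
    return full_clips + [last_clip]


if __name__ == "__main__":
    # A 90-second explainer under the assumed 16-second cap.
    print(clips_needed(90))
    print(plan_clips(90))
```

Under these assumptions, a 90-second explainer needs six separate generations, each of which consumes credits and has to be kept visually consistent with the others, so it is worth estimating the clip count before committing to a longer piece.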

Use Cases: Despite its limitations for long-form content, Runway is a fantastic tool for creative and visual projects in academia. Educators in design, film, or animation programs can use Runway as a teaching tool – students can prototype short films or effects with AI assistance, exploring concepts in generative art. In more traditional subjects, Runway can help illustrate abstract or hard-to-visualize concepts. For universities fostering innovation, Runway offers a glimpse into the future of AI-generated media that students and faculty can start exploring now.

Conclusion

AI video generation tools are rapidly maturing, and universities stand to gain tremendously by incorporating them into teaching and content creation. Among these, Akool emerges as a leader for university use cases thanks to its blend of live avatar technology, voice cloning, and seamless translation, which directly address the needs of a global, digital-first education environment.

Professors can maintain their personal presence via digital avatars, reach wider audiences in any language, and even engage students in real time using Akool’s platform – all of which elevates the learning experience without a proportional increase in effort. As we move further into 2025, embracing such AI tools can help universities stay ahead in delivering scalable, inclusive, and innovative education.

Try Akool’s free trial to experience real-time avatars and global scalability for higher education.
