1)Introduction

Image-to-video (I2V) models transform a single still image into a moving clip, adding motion, depth, and camera direction with AI. In 2025 they matter because video now drives attention across every channel, and I2V makes high-quality motion possible without full shoots, crews, or complex timelines.

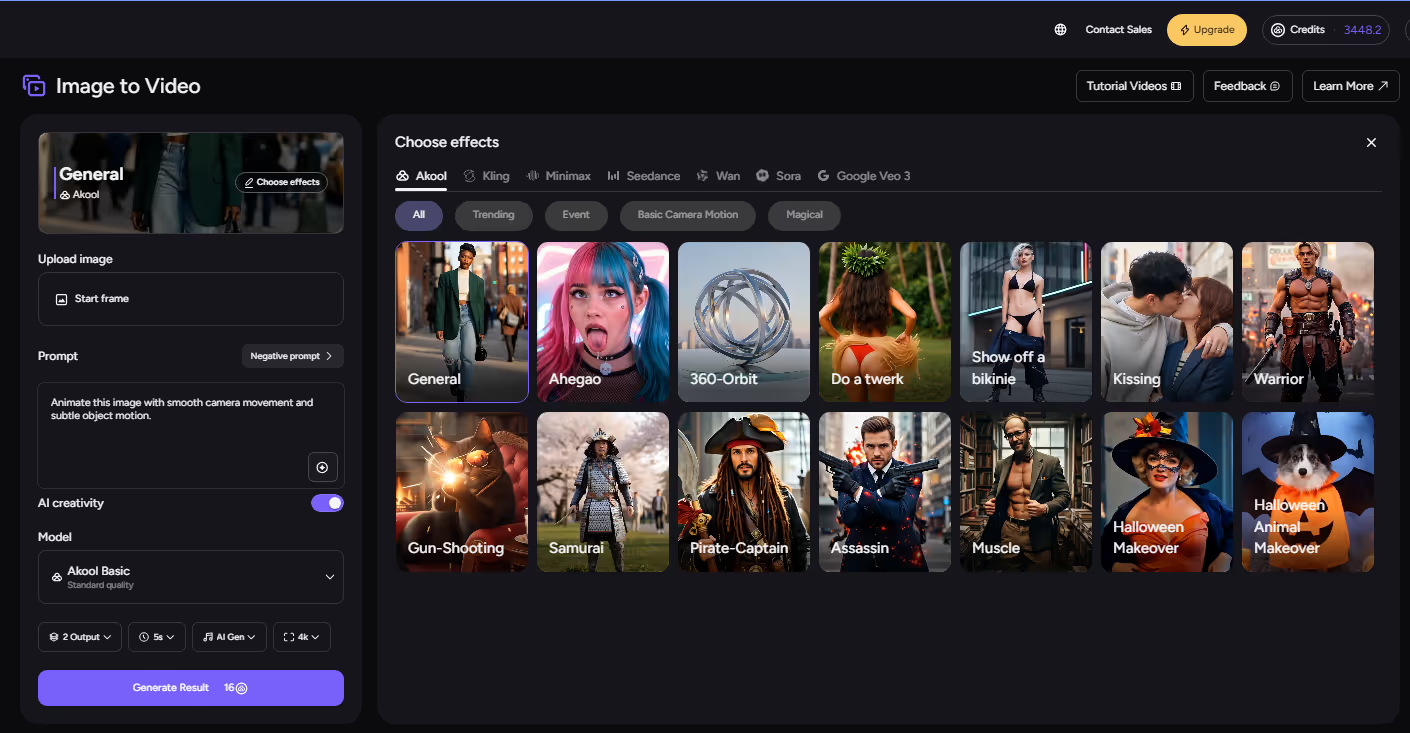

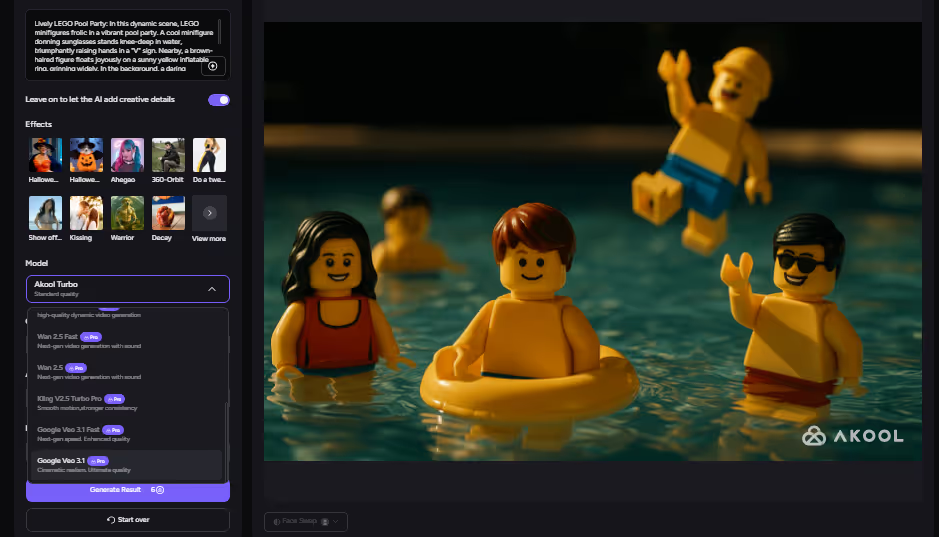

Akool has emerged as a category leader by bundling high‑fidelity generation, avatars, and enterprise features in one integrated suite.

What you’ll learn: how I2V works, model types, creative workflows, the best tools (Akool V2, Sora 2, WAN 2.2, Seedream, Nano Banana, plus Runway Gen‑2, Pika), how to choose, best practices, and future trends.

2) What Are Image‑to‑Video Models?

An image-to-video (I2V) model turns a still image into a moving video. You upload a photo, and the AI predicts realistic motion—such as camera pans, facial expressions, or environmental changes—creating short video clips that feel natural and coherent.

Unlike text-to-video, which starts from a written prompt, I2V uses a visual anchor to ensure detail consistency. It bridges the gap between static visuals and full video production, making it ideal for brand marketing, animation, design previews, or short social clips.

Key Benefits:

- Saves production time and cost

- Generates realistic motion with minimal input

- Keeps subject identity and composition intact

- Scales easily for campaigns and content creation

3) Types of Image‑to‑Video Models

Not all image-to-video generators work the same way. Several types of I2V models have emerged, each with different strengths and ideal use cases. Here we break down the major categories:

1. Single-Image Motion Models

Generate video directly from one image. Easy to use, excellent for realistic camera moves and gentle motion effects. Models like Akool’s Sora 2 and WAN 2.2 excel at maintaining subject detail while adding subtle cinematic depth.

2. Reference-Based Models

Use additional photos or motion videos as guides. Perfect for motion transfer—animating a still image based on a real performance. Ideal for character animation, dance, or lip-sync content.

3. Hybrid Text + Image Models

Accept both an image and a text prompt for precise creative control. You can direct actions (“camera pans across sunset”) or add effects (“snow falls around the character”). This is the standard for 2025’s most advanced tools like Akool, Runway, and Pika.

4. High-Speed vs. High-Fidelity

Fast modes offer instant drafts for social media; high-fidelity models prioritize cinematic detail. Creators often iterate with fast models, then finalize with 4K-quality renders using tools like Akool V2.

4) How to Use I2V for Key Creative Tasks

One of the great things about image-to-video AI is its adaptability. Whether you’re a social media creator, a brand marketer, an animator, or a concept artist, there’s a workflow to fit your needs. In this section, we’ll explain how to effectively use i2v models for a variety of creative scenarios.

Social media videos.

Start with a bold, high‑res vertical image and aim for 6–10 seconds. Use a fast mode and a single strong action—e.g., “rapid push‑in on the product, confetti burst, loop cleanly.” Keep composition, aspect ratio, and captions platform‑ready.

Product or brand showcases.

Feed studio‑quality images. Favor controlled camera orbits, slow pans, and dynamic but clean lighting. Choose high‑fidelity rendering for ads so logos, labels, and micro‑details remain sharp across frames.

Character or avatar animation.

Use clear portraits or full‑body shots. Direct facial expressions and simple gestures (“smile and wave,” “blink, look to camera”). For speech, combine with a lip‑sync or voice model. Keep clips short to minimize identity drift; chain multiple beats for longer dialog.

Cinematic or narrative storytelling.

Treat each shot like a director. Specify camera grammar (“slow dolly‑in,” “pan left across battlefield”), atmosphere (“sunset deepens”), and depth cues. Generate several short shots consistently, then edit together for longer scenes.

Concept art & prototyping.

Preserve the illustration style (“animate in the same sketch/anime look”). Explore short motions—takeoff plume, fabric sway, architectural fly‑through—to validate ideas quickly, then upscale or refine once the direction works.

General workflow: provide the best possible image, give concise visual direction, select a motion preset when available, set duration and resolution appropriate to the channel, and iterate.

5) Best Models for Image‑to‑Video Tasks (Quick Comparison)

Akool V2 anchors high‑fidelity frames; Sora 2 delivers filmic motion; WAN 2.2 excels at dynamic, believable movement; Seedream elevates look/lighting; Nano Banana keeps human faces consistent; Runway Gen‑2 is flexible and approachable; Pika is perfect for quick effects.

Quick Reviews

- Akool V2. Photoreal frames up to 4K, strong across styles, ideal in I2V pipelines where image fidelity is non‑negotiable; heavier at max settings.

- Sora 2 (OpenAI). Filmic storytelling and consistent motion with nuanced light and atmosphere; premium access and longer renders are common.

- WAN 2.2. Cinema‑grade motion, depth, and physics; 14B model delivers top detail but is compute‑intensive, smaller variants trade some fidelity for speed.

- Seedream 4.0. Style‑savvy, photoreal lighting—great for elevating per‑frame aesthetics; pair with a motion‑centric model for complex actions.

- Nano Banana. Face and identity specialist for talking heads and people‑centric clips; not intended for landscapes or product‑only scenes.

- Runway Gen‑2. Accessible text+image video; short clips with creative breadth, requires prompt iteration for precise control.

- Pika. Rapid, playful effects for 1–4s meme‑style animations; favors novelty and speed over realism or length.

6) Model Comparison & Evaluation

When choosing or evaluating image-to-video models, it’s important to consider several performance factors. Here we compare the models across key metrics:

- Output Quality: high‑end models (Akool V2/Sora 2) yield crisp, production‑ready frames; lighter/faster modes trade resolution for speed.

- Motion Realism: look for inertia, parallax, and stable subjects (WAN‑family, Sora‑class).

- Flexibility: hybrid text+image and reference‑motion support expand control; presets help non‑experts.

- Speed & Cost: fast modes and hosted GPUs accelerate iteration; quality modes add time/expense.

- Subject Consistency: critical for faces, products, and logos; choose identity‑aware models.

- Scalability: consider APIs, batch jobs, 4K output, and predictable seeds for production workflows.

Typical trade‑offs: speed vs. cinematic polish; creative freedom vs. deterministic control; generalist flexibility vs. specialist reliability.

7) Choosing the Right Model

With many models and tools available, how do you pick the right image-to-video solution for your needs? Selecting the optimal model comes down to a few key considerations. Here’s a decision-making guide to help you navigate the options:

Decision guide:

- Purpose: ad, social post, avatar explainer, cinematic beat, prototype.

- Time/Budget: fast cloud draft vs. high‑fidelity render; per‑clip costs.

- Inputs on hand: only an image, or also text, references, audio? Match model type.

- Quality bar: platform, resolution, and brand standards.

- Features: need audio, multi‑shot, real‑time avatar, or 4K?

- Scale: volume, API automation, reproducibility.

Creator Checklist

- Define goal, audience, and style.

- Pick model mode (fast vs. quality) and aspect ratio.

- Use the highest‑quality image available.

- Write a short, visual prompt (camera + motion).

- Test 2–3 quick variants; lock direction.

- Render a quality pass; check identity/logo stability.

- Add captions/audio; finalize and export.

8) Best Practices & Common Mistakes

Getting the most out of image-to-video AI isn’t just about picking the right model – it’s also about using it effectively. Here are some best practices to ensure your AI-generated videos look great, as well as common mistakes to avoid:

Do this:

- Use high‑res, well‑lit images with clear subjects.

- Keep prompts concise and visual (one action per clip).

- Leverage camera/motion presets for reliable results.

- Keep shots short to minimize drift; stitch sequences in edit.

- Maintain style and lighting consistency across a series.

- Post‑polish: stabilize, interpolate, or upscale as needed.

- Apply brand‑safety review; disclose AI use where appropriate.

Avoid this:

- Low‑quality, compressed inputs.

- Overstuffed prompts (too many actions at once).

- Pushing long single takes far beyond model limits.

- Ignoring logo/text stability on product work.

- Using likenesses without permission or policy compliance.

9) The Future of Image‑to‑Video

The pace of advancement in AI video generation is blistering, and the capabilities we see in 2025 are set to evolve rapidly in the coming years. Here are some key trends and developments shaping the future of image-to-video models, and what we can expect moving forward:

- Real‑time generation: from near‑instant drafts to live avatars and interactive streams.

- Longer, story‑driven outputs: multi‑shot coherence and memory across scenes.

- Better physics & camera realism: more accurate depth, lighting, materials, and parallax.

- Live/interactive avatars: personalized, multilingual brand ambassadors at scale.

- Convergence: integrated audio, depth/3D, and AR‑ready outputs.

- Authenticity & governance: watermarking, policy tooling, and enterprise controls.

Akool’s role: pushing high‑fidelity frames, avatar realism, multi‑model orchestration, and production‑grade features that bring these trends into everyday creative workflows.

10) Conclusion

Image‑to‑video has become a core creative superpower in 2025: it turns a single image into motion that sells, explains, and entertains—fast. You’ve seen how I2V works, the main model types, practical workflows, leading tools, selection criteria, and proven best practices. The road ahead points to real‑time, longer, more physical, and more interactive video—and Akool is helping lead that future.

Explore Akool’s AI Video Generation Suite to unlock next‑generation image‑to‑video creativity.